When Apple unveiled Apple Intelligence at its Worldwide Developers Conference (WWDC) in June 2024, it marked the beginning of a new chapter for the company’s software ecosystem. This ambitious suite of personal intelligence features, deeply integrated into iOS, iPadOS, and macOS, promises to redefine how users interact with their devices. However, the keynote was not the finish line; it was the starting pistol for a multi-stage marathon. The full scope of Apple Intelligence won’t be available on day one. Instead, Apple is pursuing a deliberate, phased rollout, which makes the next several iOS updates critically important for the platform’s development. This methodical approach helps Apple tackle the technical challenges of deploying AI at scale while upholding its stringent privacy standards. Understanding this roadmap is key to appreciating the future of the entire Apple ecosystem, from the iPhone in your pocket to the next generation of augmented reality.

The Staged Unveiling: Deconstructing the Apple Intelligence Rollout

Apple’s strategy for Apple Intelligence is not a simple feature drop. It’s a foundational shift that requires careful, iterative deployment. The initial release in iOS 18 is just the first step, setting the stage for more powerful capabilities that will arrive in subsequent point releases like iOS 18.1 and 18.2.

Initial Launch: The “Beta” Phase in iOS 18.0

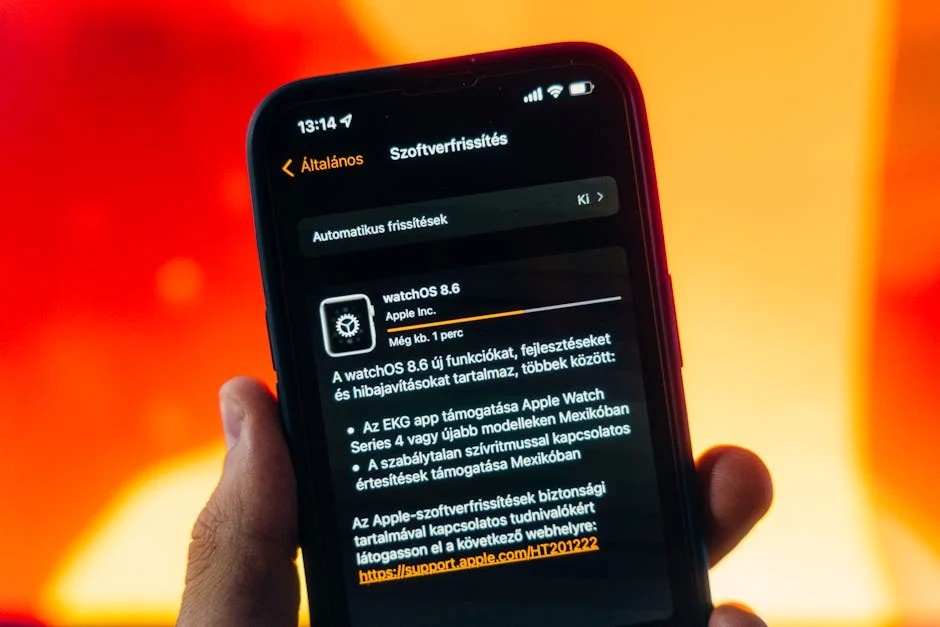

When the first public version of iOS 18 launches this fall, Apple Intelligence will arrive with a “beta” tag. This designation is crucial; it sets user expectations and signals that the system is still evolving. Initially, the feature set will be limited in both scope and availability.

Key features expected at launch include:

- Writing Tools: System-wide access to proofreading, rewriting, and summarizing text in apps like Mail, Notes, and Pages.

- Image Playground: The ability to create generative images in Animation, Sketch, and Illustration styles, available both as a standalone app and within Messages.

- Genmoji: The creation of unique emoji characters based on text descriptions.

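- Enhanced Siri: A more natural and contextually aware Siri that understands on-screen content and can perform more complex, multi-step actions within and across apps. Apple has indicated that some of these capabilities, such as on-screen awareness, will expand to more apps over time. Even so, this is the most significant Siri update in years.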

However, these features will come with significant initial limitations. The most prominent is language support, which will be restricted to U.S. English at the outset. Furthermore, the on-device processing requirements mean that Apple Intelligence will only be available on the iPhone 15 Pro and iPhone 15 Pro Max (with the A17 Pro chip) and newer models, as well as on iPads and Macs with M-series chips (M1 or later). These hardware requirements underscore the computational power needed for on-device AI. This initial phase allows Apple to stress-test its infrastructure, gather feedback from real-world use, and prepare for a broader expansion.
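
The launch limits above amount to two independent gates: one on hardware, which no software update can lift, and one on language, which later updates can widen. The sketch below is a hypothetical illustration, not Apple’s implementation: `Device`, `availability`, and the chip and language sets are assumptions chosen to mirror this section, and the chip list is deliberately non-exhaustive.

```python
from dataclasses import dataclass

# Hypothetical launch gates mirroring the limits described in this section.
# Illustrative values only, not Apple's actual configuration; the chip set
# is non-exhaustive.
SUPPORTED_LANGUAGES = {"en-US"}
SUPPORTED_CHIPS = {"A17 Pro", "M1", "M2", "M3", "M4"}


@dataclass(frozen=True)
class Device:
    chip: str      # e.g. "A17 Pro", "A16 Bionic", "M2"
    language: str  # device language as a BCP 47 tag, e.g. "en-US"


def availability(device: Device,
                 languages: set = SUPPORTED_LANGUAGES,
                 chips: set = SUPPORTED_CHIPS) -> str:
    """Explain whether the feature set is available on this device."""
    # Hard gate: checked first, because no update can change the hardware.
    if device.chip not in chips:
        return "unsupported hardware"
    # Soft gate: later updates can widen the set of supported languages.
    if device.language not in languages:
        return "language not yet supported"
    return "available"


if __name__ == "__main__":
    print(availability(Device("A17 Pro", "en-US")))     # available
    print(availability(Device("A16 Bionic", "en-US")))  # unsupported hardware
    print(availability(Device("M2", "fr-FR")))          # language not yet supported
    # A later update widens only the language gate:
    print(availability(Device("M2", "fr-FR"), languages={"en-US", "fr-FR"}))  # available
```

Passing the language set as a parameter mirrors the phased strategy: expansion to a new language changes one piece of data, while the hardware check stays untouched.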

The Next Wave: What to Expect in iOS 18.1 and Beyond

The real story of Apple Intelligence will unfold in the updates that follow the initial iOS 18 release. These updates will focus on three key areas: expansion, integration, and refinement.

- Expansion: The most anticipated development will be the addition of new languages and regional support. This is a complex process that goes beyond simple translation, requiring models to be trained on cultural nuances and local contexts.

- Deeper Integration: While the initial release integrates AI into core apps, future updates will see these capabilities woven more deeply into the fabric of the OS. This could mean more sophisticated photo editing tools, proactive suggestions in the Calendar app based on your emails, or even smarter automation in Shortcuts.

- Refinement and New Features: Subsequent updates will bring more advanced features that are still in development. This could include the ability for Siri to process more complex chains of commands, understand video content on your screen, or offer deeper personal context based on your long-term usage patterns. These updates will be crucial for the entire Apple ecosystem, as each new capability will ripple across all connected devices.

Under the Hood: The Engineering Challenges of Deploying AI at Scale

Apple’s decision to phase the rollout of Apple Intelligence is not arbitrary; it’s a direct response to the immense technical and ethical challenges of building a personal AI system. The company’s approach is rooted in a hybrid processing model and a deep commitment to user privacy.

On-Device vs. Private Cloud Compute: A Delicate Balancing Act

At the heart of Apple Intelligence is a sophisticated system that decides, on the fly, whether to process a request on your device or send it to a secure server. This balancing act is critical to its success.

- On-Device Processing: For many tasks, Apple Intelligence relies on the Neural Engine in Apple silicon. This is ideal for tasks that require personal context, such as summarizing your notes or finding a specific photo. The benefits are significant: fast responses, offline functionality, and, most importantly, strong privacy, because your personal data never leaves your device. This focus on local processing is a cornerstone of Apple’s privacy strategy.

- Private Cloud Compute: For more complex requests that require larger, more powerful models (like answering an intricate query or summarizing a lengthy research paper), the request is seamlessly handed off to Apple’s Private Cloud Compute. This is where security becomes paramount. Apple has engineered custom servers built on its own silicon, with an architecture designed so that user data is used only to fulfill the request, is cryptographically protected, and is never stored or made accessible to Apple. The system is also designed so that independent security researchers can inspect the software running on these servers and verify these privacy claims.

Developing and scaling this hybrid system is a monumental engineering feat. It requires robust infrastructure, intelligent load balancing, and a seamless user experience where the transition between on-device and cloud processing is invisible. A phased rollout allows Apple to scale its Private Cloud Compute infrastructure responsibly and fine-tune the logic that governs where each query is handled.
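
Apple has not published how it decides where a request runs, but the policy described above (prefer local processing, escalate only when the local model can’t cope) can be sketched as a toy router. Everything here is a hypothetical assumption for illustration: `Request`, `route`, `Target`, and the `ON_DEVICE_TOKEN_LIMIT` threshold are invented names and values, not Apple APIs.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    ON_DEVICE = "on-device"
    PRIVATE_CLOUD = "private cloud"
    UNAVAILABLE = "unavailable"


# Hypothetical capacity of the local model, measured in input tokens.
ON_DEVICE_TOKEN_LIMIT = 4_000


@dataclass(frozen=True)
class Request:
    prompt_tokens: int       # rough size of the input
    needs_large_model: bool  # task known to exceed the local model's abilities


def route(request: Request, online: bool = True,
          token_limit: int = ON_DEVICE_TOKEN_LIMIT) -> Target:
    """Prefer local processing; escalate to the cloud only when necessary."""
    fits_locally = (not request.needs_large_model
                    and request.prompt_tokens <= token_limit)
    if fits_locally:
        # Runs offline, and the personal data never leaves the device.
        return Target.ON_DEVICE
    # Larger jobs need the server, which requires a network connection.
    return Target.PRIVATE_CLOUD if online else Target.UNAVAILABLE


if __name__ == "__main__":
    # Summarizing a short note stays local.
    print(route(Request(prompt_tokens=300, needs_large_model=False)).value)
    # on-device
    # Summarizing a lengthy research paper is escalated.
    print(route(Request(prompt_tokens=50_000, needs_large_model=False)).value)
    # private cloud
    # The same request with no connection cannot be served.
    print(route(Request(prompt_tokens=50_000, needs_large_model=False),
                online=False).value)
    # unavailable
```

Checking the local path first encodes the privacy-first default: escalation is the exception, and small requests keep working offline.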

Model Training, Refinement, and Regional Nuances

Large Language Models (LLMs) are not “finished” products. They require ongoing training, fine-tuning, and adaptation, and the models that power Apple Intelligence will be refined over time. The staggered release schedule lets Apple’s engineering teams focus their efforts, first on perfecting the U.S. English experience and then on applying those learnings to other languages and regions. This iterative process helps reduce the biases and inaccuracies that can plague AI models, making the system more helpful and reliable for a global audience.

The Ripple Effect: How Apple Intelligence Will Reshape the Entire Ecosystem

The impact of Apple Intelligence extends far beyond the iPhone. As the platform matures with each iOS update, its capabilities will create a powerful ripple effect, enhancing every product in the Apple ecosystem.

A Smarter Siri for a Connected Home and Life

A more capable, conversational, and context-aware Siri will fundamentally change how we interact with connected devices. On HomePod, that could mean asking your smart speaker complex, multi-part questions about your schedule or controlling smart home devices in a more natural way. Wearables could benefit too: an intelligence layer might eventually help Apple Watch surface proactive health insights, turning the device into a true personal wellness coach. This could become a major focus of Apple’s future health features. Even on the go, the experience with wireless audio could improve, with a smarter Siri on AirPods and AirPods Pro managing notifications, calls, and media playback through simple, natural-language commands, without you ever needing to touch your phone.

The Future of Creativity, Productivity, and Entertainment

Apple Intelligence is poised to become a powerful tool for creators and professionals. Apple Pencil users could eventually see AI-powered features that clean up sketches, suggest color palettes, or even turn rough notes into structured documents on an iPad. For professionals creating a digital mood board or a family planning a vacation, future iPad tools could use AI to automatically source and arrange images based on a simple description. Entertainment on Apple TV will also evolve, with AI-driven content recommendations and a Siri that can find exactly what you want to watch across all your streaming services with a single voice command. This smarter discovery could even become a key selling point for the platform.

Integrating the Physical and Digital Worlds

The long-term vision for Apple Intelligence is a seamless blend of our digital and physical lives. This is most evident in augmented reality, where progress is intrinsically linked to on-device AI. A more powerful intelligence layer is the foundation for the next generation of Apple Vision Pro, enabling the device to understand and interact with the world around it in real time. Future Vision Pro accessories might even include a physical controller, such as a wand, that uses AI to enable more precise spatial interactions. Even smaller accessories could benefit: AirTag might one day use AI to predict where a lost item is likely to be based on your daily routines, making the Find My network even more powerful. This broad impact across all hardware is likely to shape Apple’s accessories for years to come.

Looking forward, it’s interesting to contrast this new era with Apple’s past. An iPod revival is unlikely, but the philosophy behind Apple Intelligence, making complex technology personal and intuitive, descends directly from the ethos that made the iPod line so successful, from the iPod Classic to the simple genius of the iPod Shuffle. The spirit of the iPod Nano, the iPod Mini, and even the iPod Touch lives on in this relentless pursuit of user-centric design.

Navigating the New Era: Tips for Users and Developers

As Apple Intelligence begins its phased rollout, both users and developers should adopt a forward-looking and adaptable mindset. This is not a static feature but a dynamic platform that will grow and improve over time.

For the Everyday User

- Manage Expectations: Remember that Apple Intelligence is launching in beta. There will be limitations and occasional quirks. Be patient and view it as a preview of what’s to come, rather than a finished product.

- Explore Privacy Settings: As new capabilities are added, take the time to visit the Settings app to understand how your data is being used and to customize the experience to your comfort level.

- Embrace the Learning Curve: Start experimenting with the new features as they roll out. Try asking Siri more complex questions. Use the Writing Tools to redraft an email. The more you use the system, the more you’ll discover its potential and its current boundaries.

For Developers

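- Leverage New APIs: Apple is providing developers with new ways to bring Apple Intelligence into their apps: App Intents lets apps expose actions to Siri, apps built on standard text frameworks gain Writing Tools, and an Image Playground API brings image generation into third-party apps. Start exploring these tools now to imagine how you can build smarter, more helpful app experiences.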

- Prioritize a Privacy-First Approach: When building AI features, follow Apple’s lead. Prioritize on-device processing wherever possible and be transparent with users about how their data is handled. This aligns with Apple’s ecosystem and builds user trust.

- Avoid Common Pitfalls: A common pitfall will be to offload too much processing to the cloud when an on-device model would suffice. This can introduce latency and privacy concerns. Strive to use the least amount of data and processing power necessary to accomplish a task effectively.
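
The advice to use the least data necessary can be made concrete by whitelisting exactly what a cloud request needs, rather than trying to blacklist what it shouldn’t carry. This is a hypothetical helper, not an Apple API: `minimize_payload`, `REQUIRED_FIELDS`, and the field names are illustrative assumptions.

```python
from typing import Any

# Hypothetical: the only fields a cloud summarization task actually needs.
REQUIRED_FIELDS = frozenset({"document_text", "target_sentences"})


def minimize_payload(context: dict[str, Any],
                     required: frozenset[str] = REQUIRED_FIELDS) -> dict[str, Any]:
    """Return a new dict containing only the fields the task needs.

    Everything else in `context` (contacts, location, history) stays local.
    """
    missing = required - set(context)
    if missing:
        raise ValueError(f"missing required fields: {sorted(missing)}")
    # Sorted keys keep the outgoing payload deterministic.
    return {key: context[key] for key in sorted(required)}


if __name__ == "__main__":
    context = {
        "document_text": "Q3 planning notes: ship beta, fix sync bug.",
        "target_sentences": 3,
        "contacts": ["Ana", "Ben"],
        "location": "Home",
    }
    print(minimize_payload(context))
    # {'document_text': 'Q3 planning notes: ship beta, fix sync bug.', 'target_sentences': 3}
```

A whitelist fails safe: if someone later adds a sensitive field to the local context, it is excluded by default instead of leaking into the request.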

Conclusion

Apple Intelligence is the most significant software initiative from Cupertino in over a decade. Its arrival is not a single event but the beginning of a long-term, carefully orchestrated journey. The “beta” launch in iOS 18 is merely the foundation. The truly transformative moments will come with subsequent iOS updates, as Apple methodically expands language support, deepens system integration, and introduces more powerful capabilities. This phased rollout reflects both the immense engineering challenges involved and Apple’s commitment to user privacy. For users, developers, and anyone following the Apple ecosystem, the message is clear: the future is coming, but it will arrive one thoughtful, secure, and intelligent update at a time.