The Silent Revolution: Apple’s Grand Strategy for Augmented Reality

In the world of technology, major shifts are often heralded by splashy product launches and bold keynote announcements. The recent attention surrounding the Apple Vision Pro certainly fits this mold, capturing the imagination of developers and consumers alike with its promise of a spatial computing future. However, the most profound changes are often the ones that happen quietly, incrementally, and right under our noses. Apple’s true augmented reality (AR) strategy is not confined to a single high-end device. It is a long-running, carefully planned effort woven into the operating systems and devices that millions of people use every day. This is the story of “Invisible AR”: the suite of intelligent, context-aware features in iOS that are subtly training us for the next paradigm of human-computer interaction.

While we await the full impact of dedicated hardware, the most significant developments in Apple’s AR story are arguably found in its regular iOS updates. Features that seem like simple conveniences, such as recognizing text in a photo, identifying a plant with your camera, or hearing three-dimensional sound through your earbuds, are the foundational building blocks of a comprehensive AR platform. This article examines Apple’s methodical approach, exploring how the company is leveraging its entire ecosystem, from the iPhone to the Apple Watch, to lay the groundwork for a future where the digital and physical worlds merge seamlessly. We will analyze the core technologies, examine the “sleeper” features you’re likely already using, and connect the dots to show how this strategy culminates in products like the Vision Pro, creating a cohesive and powerful spatial computing experience.

The Foundational Pillars: Weaving AR into the Core of iOS

Apple’s journey into augmented reality didn’t begin with a headset. It started with software frameworks and silicon designed to give developers the tools to blend digital objects with the real world. This foundational work, largely powered by ARKit and the Neural Engine, has turned the iPhone and iPad into some of the most capable and widely distributed AR platforms in the world.

ARKit: The Developer’s Gateway to Reality

Introduced in 2017, ARKit was a game-changer. It gave developers a powerful and accessible framework for building high-quality AR experiences. Instead of solving complex computer vision problems from scratch, they could rely on ARKit to handle the heavy lifting. Over the years, its capabilities have grown considerably. Key features include:

- World Tracking: By fusing camera imagery with motion-sensor data, ARKit lets an iOS device understand its position and orientation relative to the world around it, so virtual objects can be “anchored” in a fixed physical location.

- Scene Understanding: ARKit can detect horizontal and vertical planes (like floors, tables, and walls), recognize images, and even identify 3D objects, making AR interactions more realistic and context-aware.

- People Occlusion and Motion Capture: The framework can understand where people are in a scene, allowing virtual objects to realistically pass behind them. It can even capture a person’s body motion in real-time to animate a virtual character, a feature with huge potential for gaming and communication.
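
ARKit does this bookkeeping for you and reports the device’s pose each frame as a camera transform, so the snippet below is not ARKit code. It is a simplified Python sketch of what “anchoring” means, under stated assumptions: the pose is reduced to a position plus a yaw angle about the vertical axis (real tracking estimates full 3D orientation), the camera looks down the negative z axis, and the `Pose` class and `world_to_camera` function are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical, simplified device pose.

    Position is in metres in the world frame; yaw is the rotation in radians
    about the vertical (y) axis. Real trackers estimate full 6-DoF orientation.
    """
    x: float
    y: float
    z: float
    yaw: float


def world_to_camera(point, pose):
    """Express a world-anchored point in the camera's coordinate frame.

    The anchor's world coordinates never change; only the camera pose does.
    We subtract the camera position, then apply the inverse of the camera's
    yaw rotation (a rotation about y by -yaw).
    """
    dx = point[0] - pose.x
    dy = point[1] - pose.y
    dz = point[2] - pose.z
    c, s = math.cos(-pose.yaw), math.sin(-pose.yaw)
    cam_x = c * dx + s * dz
    cam_z = -s * dx + c * dz
    return (cam_x, dy, cam_z)


# A virtual object anchored 2 m in front of where the session started.
anchor = (0.0, 0.0, -2.0)
print(world_to_camera(anchor, Pose(0.0, 0.0, 0.0, 0.0)))  # straight ahead
print(world_to_camera(anchor, Pose(1.0, 0.0, 0.0, 0.0)))  # camera moved right
```

Moving the camera one metre to the right makes the anchor appear one metre to its left, at `(-1.0, 0.0, -2.0)`, while the anchor’s stored world coordinates stay the same. That separation between a fixed world position and a per-frame camera pose is what lets a virtual object stay put as you walk around it.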

This continuous evolution, a recurring theme of each new iPhone and iPad release, has fostered a vibrant ecosystem of AR apps, from practical measurement tools and furniture placement simulators to immersive games. More importantly, it has created a massive sandbox in which Apple can learn what works in AR and users can become comfortable with the concept.

Core ML and the Neural Engine: The Brains Behind the Magic

AR experiences that feel instantaneous and responsive require immense computational power. This is where Apple’s custom silicon comes into play, specifically the Neural Engine in its A-series and M-series chips. The Neural Engine is optimized for machine learning (ML) tasks, allowing complex AI models to run directly on the device. This on-device processing is a cornerstone of the Apple ecosystem and is critical for two reasons: speed and privacy.

For AR, real-time performance is non-negotiable. Any lag between your movement and the digital overlay breaks the illusion. By processing data locally, Apple avoids the latency of sending information to the cloud and back. This commitment to on-device intelligence is also central to Apple’s privacy stance. Much of the sensitive data from your camera and microphone is processed on your device rather than on a remote server, a key differentiator in an increasingly data-conscious world. This combination of hardware and software powers the seemingly magical “invisible AR” features that are becoming integral to the iOS experience, and it figures prominently in discussions of iOS security.

“Invisible AR”: Everyday Features with a Spatial Soul

The most brilliant part of Apple’s strategy is how it has integrated fundamental AR concepts into everyday utilities, making them so useful that we often don’t even think of them as “augmented reality.” These features are conditioning us to point our cameras at the world and expect an intelligent, interactive response.

Live Text and Visual Look Up: Turning the World into an Interface

Live Text is a prime example of practical AR. By simply pointing your iPhone’s camera at a sign, menu, or document, the text becomes instantly selectable, translatable, and actionable. You can tap a phone number to call it or an address to open it in Maps. This is a foundational spatial computing behavior: treating the physical world as a user interface. Visual Look Up takes this a step further, allowing your device to identify landmarks, art, plants, and pets. These features are not just camera tricks; they are early, powerful demonstrations of how a device can understand and add a layer of digital information to the world around you. This is the essence of augmented reality, delivered in a simple, intuitive package.
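
Apple has not published how Live Text decides which spans of recognized text are actionable, and on Apple platforms developers would typically reach for Foundation’s `NSDataDetector` rather than hand-written patterns. The Python sketch below only illustrates the general idea: scan recognized text lines for phone numbers and links, then attach an action to each. The regular expressions, the `detect_actions` function, and the `"call"`/`"open"` labels are hypothetical and deliberately simplistic. For example, they handle only North American phone formats.

```python
import re

# Hypothetical, simplified patterns for illustration only. Production
# detectors handle many more formats (international numbers, extensions,
# street addresses, dates, and so on).
PHONE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b")
URL = re.compile(r"\bhttps?://[^\s]+")


def detect_actions(lines):
    """Return (action, text) pairs for actionable spans in recognized text.

    `lines` stands in for the output of a text-recognition step, i.e. the
    strings read from a sign, menu, or document.
    """
    actions = []
    for line in lines:
        for match in PHONE.finditer(line):
            actions.append(("call", match.group()))
        for match in URL.finditer(line):
            actions.append(("open", match.group()))
    return actions


recognized = ["Call (555) 123-4567 to book", "Menu: https://example.com/menu"]
for action, text in detect_actions(recognized):
    print(action, text)
```

Separating recognition (reading the text) from detection (deciding what the text means) is what lets the same captured words become a tappable phone number in one place and a link in another.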

The Evolution of Communication and Spatial Audio

Communication is another fertile ground for invisible AR. The built-in Translate app already offers a conversation mode that helps bridge language barriers. One can easily imagine the next step: real-time translation in which subtitles for a foreign speaker appear as an AR overlay, a concept that would be transformative for travel and international business. The audio component is already here. Spatial Audio, a headline feature of recent AirPods, AirPods Pro, and AirPods Max models, creates a 3D soundscape in which audio seems to come from all around you. This technology is not just for movies. It is essential for believable AR experiences in which digital sounds are convincingly placed in your physical environment. Imagine a navigation app in which the voice prompt for a right turn sounds as if it is coming from the street you need to turn onto. That is the power of spatial audio in AR.
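
Real Spatial Audio rendering relies on head-related transfer functions and head tracking, which also capture elevation and front/back cues. The Python sketch below is a much simpler stand-in and assumes only two output channels. It computes the direction of a sound source relative to the listener’s heading, then converts that direction into left/right gains using constant-power panning. Both function names are hypothetical.

```python
import math


def relative_azimuth(source_bearing_deg, heading_deg):
    """Angle of a sound source relative to where the listener faces.

    Bearings are compass degrees; the result is wrapped to [-180, 180),
    where negative means left and positive means right.
    """
    return (source_bearing_deg - heading_deg + 180.0) % 360.0 - 180.0


def constant_power_pan(azimuth_deg):
    """Return (left_gain, right_gain) for a source at the given azimuth.

    -90 degrees is hard left, 0 is straight ahead, +90 is hard right.
    The gains satisfy left**2 + right**2 == 1, so perceived loudness stays
    roughly constant as the source moves. Sources behind the listener are
    clamped to the nearest side, a limitation HRTF-based rendering avoids.
    """
    az = max(-90.0, min(90.0, azimuth_deg))       # clamp to the frontal arc
    theta = (az + 90.0) / 180.0 * (math.pi / 2)   # map [-90, 90] to [0, pi/2]
    return math.cos(theta), math.sin(theta)


# Listener walking north (heading 0); the turn is to the east (bearing 90).
left, right = constant_power_pan(relative_azimuth(90.0, 0.0))
print(f"left={left:.3f} right={right:.3f}")
```

In the navigation example, the azimuth works out to +90°, so the prompt plays almost entirely in the right ear. Constant-power panning is used instead of a linear crossfade because a linear crossfade produces an audible dip in loudness when the source is centered.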

Health, Home, and Awareness

The AR strategy extends beyond the iPhone. Recent Apple Watch models include advanced sensors that track motion and orientation, which makes the watch a plausible candidate for a subtle input device or controller in an AR environment. The HomePod mini hints at a future in which smart speakers could act as spatial anchors in a home, enabling multi-user AR experiences. The Find My network, built from Apple devices in use around the world and extended by AirTag, has created a global, real-world object-tracking system. It is another critical piece of the puzzle for an AR platform that needs to understand the location of people and things.
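
On Apple Watch, Core Motion already reports device attitude, so an app would not normally derive tilt by hand. The Python sketch below only shows the geometry behind using wrist tilt as a controller. It assumes the watch is held roughly still, so that the accelerometer mostly measures gravity. The axis and sign conventions, the dead-zone and gain values, and the names `tilt_from_gravity` and `tilt_to_pointer` are all illustrative assumptions.

```python
import math


def tilt_from_gravity(ax, ay, az):
    """Estimate (pitch, roll) in degrees from an accelerometer reading in g.

    Valid only when the wrist is roughly still, so the reading is dominated
    by gravity. Axis and sign conventions here are illustrative.
    """
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return pitch, roll


def tilt_to_pointer(pitch, roll, dead_zone=5.0, gain=0.5):
    """Map tilt angles to a (dx, dy) pointer velocity.

    Tilts smaller than `dead_zone` degrees are ignored, so tiny,
    unintentional wrist movements do not nudge the pointer.
    """
    def axis(angle):
        if abs(angle) < dead_zone:
            return 0.0
        # Subtract the dead zone so motion starts smoothly from zero.
        return gain * (angle - math.copysign(dead_zone, angle))

    return axis(roll), axis(pitch)


# Wrist rolled about 30 degrees: gravity splits between the y and z axes.
pitch, roll = tilt_from_gravity(0.0, 0.5, math.sqrt(3) / 2)
print(round(pitch, 1), round(roll, 1), tilt_to_pointer(pitch, roll))
```

The dead zone is the key design choice for an input device meant to be subtle. Without it, the natural tremor of a resting arm would register as constant small movements.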

The Grand Strategy: How the Ecosystem Prepares for Spatial Computing

No single Apple product exists in a vacuum. The company’s greatest strength is its tightly integrated ecosystem, and this is the ultimate trump card in its AR ambitions. Every new feature and device is another piece on the board, preparing the world for the launch of the Apple Vision Pro and beyond.

From iPhone to Vision Pro: A Seamless Transition

By embedding AR concepts into iOS, Apple is training hundreds of millions of users on the fundamental interactions of spatial computing. When these users eventually put on a Vision Pro, the core ideas will already feel familiar. Looking at an object to interact with it is a natural extension of Visual Look Up. Digital information overlaid on the world is an evolution of Live Text. This shared DNA should mean a gentler learning curve and wider adoption. For developers, an ARKit app for the iPhone is not a dead end. visionOS builds on the same frameworks, including ARKit and RealityKit, so the skills and much of the content carry over to creating a fully immersive version for Vision Pro. This continuity is a powerful incentive for the creative community to invest in the Apple ecosystem.
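
Eye-tracking selection on Vision Pro is handled by the system, so the following is not app code. As a conceptual Python sketch of “looking at an object to interact with it,” it casts a gaze ray from the eye and picks the nearest object whose bounding sphere the ray hits, using a standard ray-sphere intersection test. The target list, the bounding-sphere simplification, and the `gaze_target` function are assumptions for illustration. The sketch also assumes the viewer is outside every sphere.

```python
import math


def gaze_target(origin, direction, targets):
    """Return the name of the nearest target hit by a gaze ray, or None.

    origin, direction: 3-tuples; direction does not need to be normalized.
    targets: iterable of (name, center, radius) bounding spheres.
    Assumes the ray origin lies outside every sphere.
    """
    norm = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / norm for c in direction)
    best_name, best_t = None, math.inf
    for name, center, radius in targets:
        # Vector from the eye to the sphere's center.
        oc = tuple(c - o for c, o in zip(center, origin))
        # Distance along the ray to the point closest to the center.
        t_closest = sum(a * b for a, b in zip(oc, d))
        if t_closest < 0:
            continue  # the object is behind the viewer
        # Squared distance from the center to the ray.
        dist_sq = sum(a * a for a in oc) - t_closest ** 2
        if dist_sq > radius ** 2:
            continue  # the ray passes beside the object
        # Distance along the ray to where it first enters the sphere.
        t_hit = t_closest - math.sqrt(radius ** 2 - dist_sq)
        if t_hit < best_t:
            best_name, best_t = name, t_hit
    return best_name


scene = [
    ("photo", (0.0, 0.0, -2.0), 0.3),
    ("button", (1.0, 0.0, -2.0), 0.3),
    ("window", (0.0, 0.0, -4.0), 1.0),
]
print(gaze_target((0.0, 0.0, 0.0), (0.0, 0.0, -1.0), scene))  # looking ahead
```

Choosing the nearest hit matters because a gaze ray can pass through several objects. In this scene, looking straight ahead passes through both the photo and the window behind it, and the photo should win.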

The Role of Accessories and Future Inputs

The current ecosystem of accessories is also poised to play a role. The precision of the Apple Pencil has set a standard for digital input. It’s not a stretch to imagine this technology evolving into a more advanced spatial input device, perhaps a handheld controller for Vision Pro or a specialized Pencil for creative professionals working in 3D. Future accessories for Vision Pro will likely introduce new interaction methods of their own. Even nostalgia for the iPod is a reminder of Apple’s mastery of simple, intuitive controls. The click wheel of the iPod Classic and iPod nano embodied a design ethos focused on effortless interaction, and that philosophy persists today in the eye-tracking and gesture-based interface of the Vision Pro.

Navigating the Future: Best Practices and What’s Next

As Apple’s AR vision continues to unfold, there are actionable steps that both developers and consumers can take to prepare for and make the most of this technological shift.

For Developers: Building for the Inevitable

The message from Apple is clear: the future is spatial. Developers should not wait for a headset to become mainstream before they start thinking in 3D.

- Start Now: Begin integrating ARKit and Core ML into your applications today. Even small, utility-driven AR features can provide immense value and prepare your user base for what’s next.

- Focus on Utility: The most successful early AR apps solve real-world problems. Think less about gimmicks and more about how AR can make a task easier, faster, or more intuitive.

- Think Spatially: Start designing user interfaces and experiences that break free from the 2D screen. Consider how your app’s information and functionality could exist in a user’s physical space. For example, an architect could use an iPad and AR to walk a client through a virtual building on an empty lot, turning a static digital vision board into an interactive, immersive tool.

For Consumers: Recognizing the Shift

As a user, you can get a head start on the future by actively exploring the AR features already in your pocket.

- Be Curious: Next time you’re at a restaurant with a menu in a different language, use the camera’s translate function. Use Visual Look Up to identify a flower in your garden. These small interactions will build your “spatial literacy.”

- Embrace Spatial Audio: Experience movies and music with Spatial Audio on AirPods to understand how directional sound enhances immersion. Directional sound is likely to be a key component of future AR and VR experiences.

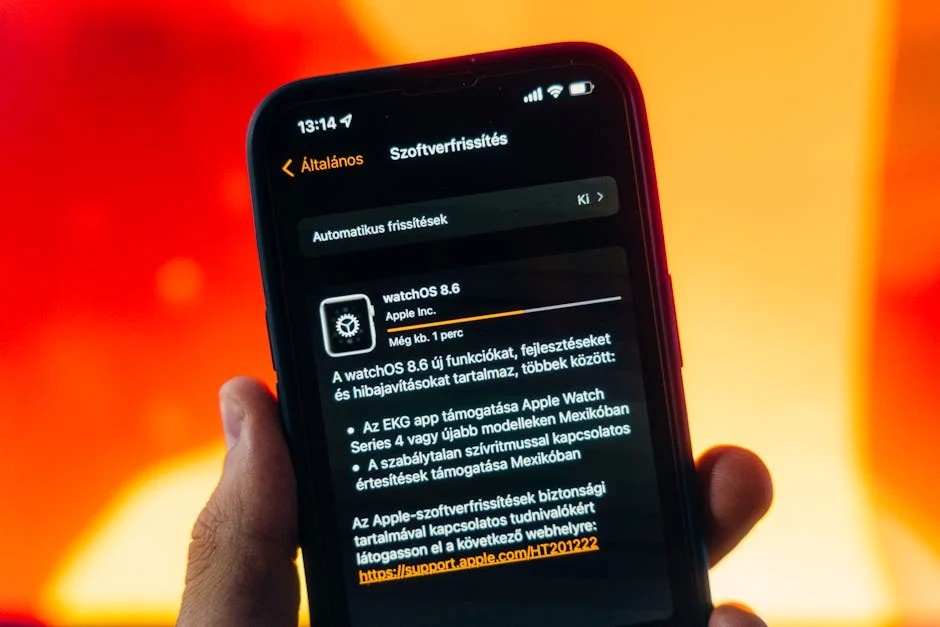

- Follow the Clues: Pay close attention to iOS updates and the features highlighted for new iPhones. These are often the best indicators of Apple’s long-term roadmap and the next steps in its AR journey. Even Apple’s health features may hint at future AR applications for fitness and medical data visualization.

Conclusion: The Inevitable, Augmented Future

Apple’s approach to augmented reality is a masterclass in long-term strategy. While the Vision Pro represents a monumental leap forward, the quiet, persistent integration of AR technologies into the core iOS experience is the true engine of this revolution. By building on the foundations of ARKit and the Neural Engine, Apple has transformed its existing hardware into powerful AR tools. Features like Live Text, Spatial Audio, and Visual Look Up are not mere novelties; they are carefully designed stepping stones, gradually and seamlessly guiding hundreds of millions of people toward a spatial computing future. The most important Apple AR news isn’t always about a new product announcement. It’s in the subtle software update that makes your phone just a little bit smarter, a little more aware of the world around it, and one step closer to merging our digital and physical realities forever.