The Evolution of Listening: How AirPods Are Becoming Smarter Than Ever

Since their debut, AirPods have transcended the category of wireless earbuds to become an iconic, indispensable part of the Apple ecosystem. They are more than just an accessory for listening to music; they are a conduit for communication, a tool for focus, and increasingly, a platform for ambient computing and personal health. The latest wave of software and hardware updates continues this trajectory, introducing a suite of groundbreaking features that further blur the lines between what we hear, how we interact with our devices, and how we monitor our well-being. This isn’t just incremental improvement; it’s a fundamental shift in the role personal audio devices play in our daily lives.

This article delves into these transformative new capabilities, moving beyond the surface-level announcements to provide a comprehensive technical breakdown. We will explore the sophisticated technology powering these features, analyze their real-world applications, and offer actionable insights for users looking to maximize their experience. As we unpack these advancements, it becomes clear that the latest AirPods news is intrinsically linked to broader trends in Apple ecosystem news, from the spatial computing power of the Vision Pro to the ever-deepening integration of Apple Health. The era of passive listening is over; the age of intelligent, contextual audio has arrived.

The New Frontier: A High-Level Look at Groundbreaking AirPods Updates

The latest updates for the AirPods family, particularly the AirPods Pro and AirPods Max, introduce four pillar features that collectively represent a significant leap forward. These enhancements are powered by new silicon, advanced algorithms, and deeper integration with iOS and the broader range of Apple hardware. This wave of innovation solidifies the idea that the most exciting iPhone news or iPad news is often complemented by parallel advancements in its most popular accessory.

Adaptive Transparency Pro: Hearing What Truly Matters

Building on the foundation of Adaptive Transparency, the new “Pro” version introduces a layer of contextual intelligence. Instead of simply reducing all loud ambient sounds, it uses on-device machine learning to selectively isolate and even enhance specific audio signatures. The system can learn to prioritize a specific person’s voice in a crowded café, elevate the clarity of a train station announcement while dampening the screech of the rails, or automatically focus on your GPS navigation prompts from your iPhone while you’re driving. This feature leverages the power of the neural engine, turning your AirPods into a real-world audio filter that you can tune to your environment.
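
The selective filtering described above can be pictured as a per-class gain stage applied after sound sources have been separated and labeled. The sketch below is only an illustration under stated assumptions: the class labels, the gain values, and the names ClassifiedSource and remix are hypothetical, and the upstream classifier that produces the labels is assumed rather than shown.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical per-class gains in decibels (positive boosts, negative attenuates).
# Labels and values are illustrative assumptions, not Apple settings.
DEFAULT_GAINS_DB: Dict[str, float] = {
    "speech": 6.0,
    "announcement": 6.0,
    "rail_screech": -20.0,
    "crowd_chatter": -12.0,
}


def db_to_linear(gain_db: float) -> float:
    """Convert a gain in decibels to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


@dataclass
class ClassifiedSource:
    label: str            # class assigned by an assumed upstream classifier
    samples: List[float]  # mono samples in the range [-1.0, 1.0]


def remix(
    sources: List[ClassifiedSource],
    gains_db: Dict[str, float] = DEFAULT_GAINS_DB,
    unknown_gain_db: float = 0.0,
) -> List[float]:
    """Scale each labeled source by its class gain, sum, and clamp to [-1, 1]."""
    if not sources:
        return []
    length = min(len(s.samples) for s in sources)
    mixed = [0.0] * length
    for source in sources:
        # Labels without a configured gain pass through at unknown_gain_db.
        gain = db_to_linear(gains_db.get(source.label, unknown_gain_db))
        for i in range(length):
            mixed[i] += gain * source.samples[i]
    return [max(-1.0, min(1.0, x)) for x in mixed]
```

With these assumed values, a source labeled "rail_screech" is scaled to one tenth of its amplitude while a source labeled "speech" is roughly doubled, which is the kind of trade-off the paragraph describes.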

Proactive Audio Handoff: Your Sound, Anticipated

Automatic device switching has been a cornerstone of the AirPods experience, but Proactive Handoff takes it a step further. By utilizing data from Ultra Wideband (UWB) chips, accelerometers, and your usage patterns, AirPods can now anticipate which device you intend to use. For example, if you’re listening to a podcast on your iPhone and you sit down at your desk and wake your MacBook, the audio might not switch immediately. But if you open a video conferencing app, the handoff becomes instantaneous and pre-emptive. Similarly, walking into your living room might prompt your AirPods to suggest handing off the audio to your HomePod mini or Apple TV, creating a truly seamless and intelligent audio experience across the home.
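
One way to reason about the behavior described above is as a scoring problem: each nearby device gets a score from proximity, orientation, and activity, and audio moves only when a candidate clearly beats the current device. The following is a rough sketch, not Apple's method; the DeviceSignal fields, the weights, and the thresholds are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DeviceSignal:
    name: str
    distance_m: float          # assumed UWB-style range estimate
    facing_angle_deg: float    # 0 means the user faces the device directly
    is_awake: bool
    audio_app_in_foreground: bool


def handoff_score(d: DeviceSignal) -> float:
    """Combine signals into a 0..1 score using illustrative weights."""
    proximity = max(0.0, 1.0 - d.distance_m / 5.0)
    orientation = max(0.0, 1.0 - abs(d.facing_angle_deg) / 90.0)
    score = 0.3 * proximity + 0.2 * orientation
    if d.is_awake:
        score += 0.1
    if d.audio_app_in_foreground:
        score += 0.4
    return score


def choose_target(
    current: DeviceSignal,
    candidates: List[DeviceSignal],
    threshold: float = 0.7,
    margin: float = 0.1,
) -> str:
    """Return the device that should play audio; stay put unless a candidate clearly wins."""
    if not candidates:
        return current.name
    best = max(candidates, key=handoff_score)
    best_score = handoff_score(best)
    if best_score >= threshold and best_score >= handoff_score(current) + margin:
        return best.name
    return current.name


iphone = DeviceSignal("iPhone", 0.3, 90.0, False, True)          # in a pocket, playing a podcast
mac_idle = DeviceSignal("MacBook", 0.6, 0.0, True, False)        # just woken, no audio app
mac_call = DeviceSignal("MacBook", 0.6, 0.0, True, True)         # video conferencing app open
```

In this toy setup, waking the MacBook alone does not move the audio, while opening a conferencing app on it does. The margin parameter is there to avoid flapping between devices with similar scores.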

Integrated Health Sensing: The Ear as a Wellness Gateway

The latest Apple health news reveals a significant expansion into new biometric data streams, and AirPods are at the forefront of this initiative. New models of AirPods Pro are now equipped with minute infrared sensors capable of taking intermittent body temperature readings from the ear canal, a measurement site that closely tracks core body temperature. This data is securely logged in the Health app, allowing for long-term trend analysis for fever detection and cycle tracking. Furthermore, enhanced motion sensors in the AirPods Max can now provide subtle feedback on head and neck posture during long work sessions, helping to combat “tech neck” and promote better ergonomic habits. This transforms the devices from pure audio products into holistic wellness tools, complementing the data gathered by the Apple Watch.

Personalized Spatial Audio with Acoustic Mapping

Spatial Audio has already revolutionized immersive listening, but it’s now becoming deeply personal. Using the LiDAR scanner on a recent iPhone or iPad, or the advanced world-sensing cameras on the Apple Vision Pro, users can now perform a one-time “Acoustic Mapping” of their most-used rooms. By scanning the geometry and materials of a space (like your home office or living room), the system creates a custom audio profile. When you then watch a movie on your Apple TV or engage in a spatial experience on Vision Pro in that room, the AirPods adjust the audio rendering in real-time to account for the room’s unique reflective surfaces and acoustics, creating an unparalleled and hyper-realistic sense of space and presence. This is a major development in Apple AR news, grounding digital audio in the physical world.
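
The article does not say how a scanned room would be turned into an audio profile. As a rough illustration of why geometry and materials matter, the classical Sabine formula estimates reverberation time as RT60 = 0.161 * V / A, where V is the room volume in cubic meters and A is the total absorption (each surface area multiplied by its absorption coefficient). The sketch below applies that textbook estimate; the Surface type and the absorption coefficients are illustrative assumptions, not measured values or anything Apple has described.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Surface:
    name: str
    area_m2: float
    absorption: float  # 0.0 = fully reflective, 1.0 = fully absorptive (assumed values)


def sabine_rt60(volume_m3: float, surfaces: List[Surface]) -> float:
    """Estimate reverberation time in seconds with the Sabine formula."""
    total_absorption = sum(s.area_m2 * s.absorption for s in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return 0.161 * volume_m3 / total_absorption


# A hypothetical 4 m x 5 m x 2.5 m home office.
office = [
    Surface("carpeted floor", 20.0, 0.30),
    Surface("plaster ceiling", 20.0, 0.05),
    Surface("painted walls", 45.0, 0.10),
]
rt60 = sabine_rt60(4 * 5 * 2.5, office)  # about 0.7 seconds with these assumptions
```

A renderer that knew the room was this "live" could, in principle, shape its virtual reflections to match, which is the effect the paragraph describes.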

Under the Hood: The Technology Driving the Next-Gen Audio Experience

These user-facing features are the result of significant advancements in Apple’s custom silicon, sensor technology, and software algorithms. Understanding the underlying tech provides insight into not only how these features work, but also Apple’s long-term strategy for personal computing and the central role audio will play.

The H3 Chip and Advanced Neural Processing

At the heart of these new capabilities is the speculated next-generation “H3” audio chip. This silicon is expected to carry a significantly more powerful Neural Engine, enabling the complex, real-time audio processing required for Adaptive Transparency Pro. It would re-classify the surrounding soundscape dozens of times per second, separating speech from noise as conditions change. This on-device processing is a cornerstone of Apple’s philosophy, ensuring that sensitive audio data from your environment is handled locally. This commitment to on-device intelligence is a recurring theme in both iOS security news and Apple privacy news, as it minimizes data transmission to the cloud and gives users greater control over their information.

Ultra Wideband (UWB) and Ecosystem Synergy

Apple’s U1 chip and its second-generation Ultra Wideband successor, once primarily associated with the precision finding of an AirTag, are now critical for ecosystem intelligence. Proactive Audio Handoff relies on UWB’s spatial awareness to determine not just which device you are near, but your precise orientation relative to it. This allows the system to differentiate between glancing at your iPad and actively engaging with it. This deep, hardware-level integration is what separates the Apple ecosystem from its competitors. It’s a symphony of devices, from the iPhone to the Apple Watch, all communicating their status and location to enable experiences that feel magical. The days of the standalone iPod Classic or iPod Shuffle are long gone; today, every device is a node in a larger, interconnected network.

Biometric Sensors and Secure HealthKit Integration

The inclusion of new biometric sensors marks a major milestone in Apple accessories news. The infrared body temperature sensor is a marvel of miniaturization, designed to function with minimal power draw. The advanced 9-axis motion sensors in the AirPods Max provide the granular data needed for accurate posture tracking. Critically, all this sensitive health data is encrypted on-device and transmitted securely to the Health app on your iPhone via Bluetooth. It is never accessible to Apple or third parties without explicit user consent, adhering to the stringent privacy standards that are a hallmark of the HealthKit platform. This secure integration is a key reason users trust Apple with their most personal data.
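
To make the posture-tracking idea concrete, a simple approach is to measure the angle between the accelerometer's gravity reading and an upright reference, then nudge only when the tilt is sustained. This is a minimal sketch under assumptions: the axis convention (z pointing up when the head is upright), the 25-degree threshold, and the 80 percent fraction are hypothetical, and real sensor fusion would also use gyroscope and magnetometer data.

```python
import math
from typing import Sequence, Tuple

Vector = Tuple[float, float, float]

# Assumed accelerometer reading (in g) when the head is held upright.
UPRIGHT: Vector = (0.0, 0.0, 1.0)


def tilt_deg(accel: Vector, reference: Vector = UPRIGHT) -> float:
    """Angle in degrees between an accelerometer reading and the upright reference."""
    dot = sum(a * r for a, r in zip(accel, reference))
    norm = math.sqrt(sum(a * a for a in accel)) * math.sqrt(sum(r * r for r in reference))
    if norm == 0:
        raise ValueError("vectors must be non-zero")
    # Clamp to guard against rounding pushing the cosine outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def needs_posture_nudge(
    samples: Sequence[Vector],
    threshold_deg: float = 25.0,
    min_fraction: float = 0.8,
) -> bool:
    """True when at least min_fraction of the window exceeds the tilt threshold."""
    if not samples:
        return False
    tilted = sum(1 for s in samples if tilt_deg(s) > threshold_deg)
    return tilted / len(samples) >= min_fraction
```

Requiring a sustained tilt over a window, rather than reacting to a single sample, keeps a brief glance down at a phone from triggering a reminder.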

From Novelty to Necessity: Real-World Applications and Impact

These technological advancements are not just for show; they translate into tangible benefits that solve real-world problems and enhance daily routines across various user demographics.

The Hybrid Worker and Daily Commuter

Consider a professional working in a hybrid environment. During their morning commute on a noisy train, Adaptive Transparency Pro isolates the conductor’s announcements from the noise of the carriage. Upon arriving at a bustling open-plan office, the feature can be tuned to focus on the voices of their immediate team members while dampening the chatter from across the room. When a video call comes in on their MacBook, Proactive Handoff switches the audio from the Spotify playlist on their iPhone without them ever touching a setting. During a long afternoon of focused work, the posture reminders from their AirPods Max provide a gentle nudge to sit up straight, reducing physical strain.

The Home Entertainment and Spatial Computing Enthusiast

For those invested in home entertainment, the impact is profound. After performing an Acoustic Mapping of their living room, watching a blockbuster film on Apple TV with AirPods Max becomes a bespoke cinematic experience. The sound of a starship flying overhead feels like it’s truly reflecting off their own ceiling. This technology serves as a perfect audio companion to the visual immersion of the Apple Vision Pro. As a user interacts with spatial apps, the audio dynamically adapts to the mapped room, creating a seamless blend of digital and physical reality. This synergy, often hinted at in Apple TV marketing news, is now a practical reality, making the home the ultimate entertainment venue.

The Health-Conscious User and the Future of Wellness

The integration of health features transforms AirPods from listening devices into passive wellness monitors. A user might not notice a low-grade fever, but their AirPods will, logging the data in the Health app and potentially providing an early warning of illness. This data, combined with metrics from an Apple Watch, creates a more holistic picture of one’s health. This aligns with the latest Siri news, where the assistant is becoming more proactive in surfacing health insights. The potential for future development is immense, from detecting changes in breathing patterns to monitoring for signs of balance issues using the motion sensors.
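
The "early warning" idea above amounts to comparing each new reading with a personal baseline rather than a fixed cutoff. The sketch below shows one simple way to do that with a rolling median. It is an illustration only: the 7-reading window and the 0.5 °C threshold are hypothetical choices, not clinical guidance or a description of how the Health app works.

```python
from statistics import median
from typing import List


def flag_elevated(
    readings_c: List[float],
    window: int = 7,
    threshold_c: float = 0.5,
) -> List[int]:
    """Return indices of readings that exceed the rolling baseline by threshold_c.

    The baseline for each reading is the median of the previous `window` readings,
    so the first `window` readings are never flagged.
    """
    flagged = []
    for i in range(window, len(readings_c)):
        baseline = median(readings_c[i - window:i])
        if readings_c[i] - baseline >= threshold_c:
            flagged.append(i)
    return flagged


# Hypothetical daily readings in degrees Celsius; the eighth value is elevated.
history = [36.5, 36.6, 36.4, 36.5, 36.6, 36.5, 36.5, 37.3, 36.6]
elevated = flag_elevated(history)
```

Using the median rather than the mean keeps a single elevated reading from dragging the baseline upward for the days that follow.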

Maximizing Your Experience: Tips, Tricks, and Considerations

To get the most out of these powerful new features, users should be mindful of setup, customization, and the nuances of the technology. A little configuration can go a long way in tailoring the experience to your specific needs.

Best Practices for Setup

- Calibrate for Your Spaces: Take the time to perform the Acoustic Mapping in your two or three most-used locations, like your office and living room. The difference in audio quality is noticeable.

- Train the AI: For Adaptive Transparency Pro, actively use the feature. The more you use it in different environments, the better the on-device models become at predicting which sounds you want to prioritize.

- Customize in Control Center: Don’t stick with the defaults. Long-press the volume slider in Control Center to access detailed AirPods settings. Here, you can fine-tune the sensitivity of the transparency and noise cancellation modes on the fly.

Common Pitfalls to Avoid

- Ignoring Privacy Pop-ups: When setting up the new health features, you will be asked for permissions to write data to the Health app. Read these carefully and understand what you are sharing. Review your settings periodically in the Health app’s “Sources” tab.

- Expecting Instant Perfection: Proactive Handoff is a learning system. In the first few days, it might make a few incorrect guesses. Be patient and continue using your devices as you normally would; the predictive accuracy will improve over time.

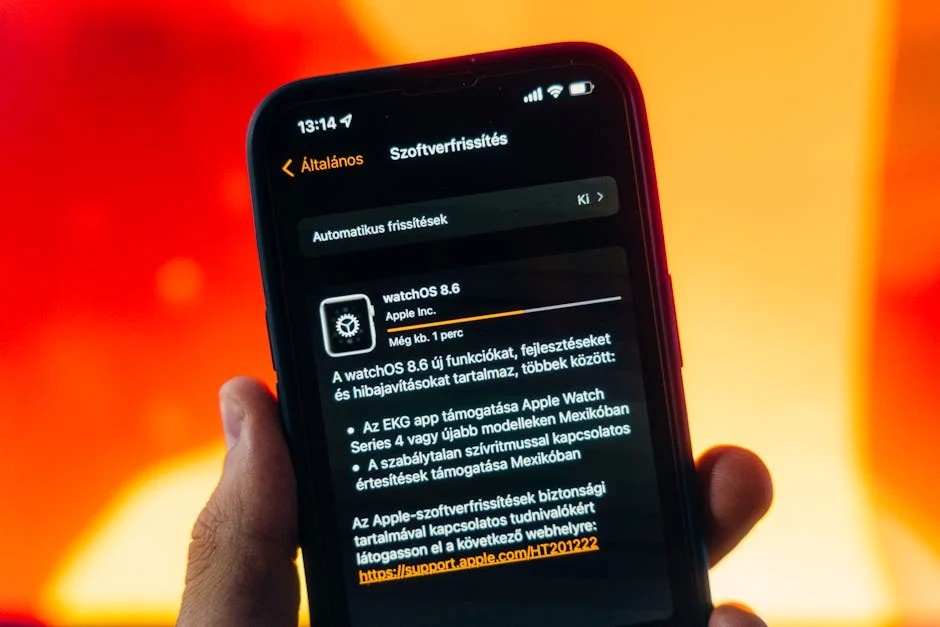

- Forgetting Firmware Updates: These intelligent features are constantly being refined. Keep your AirPods firmware up to date alongside the latest iOS updates to benefit from ongoing performance and accuracy improvements.

Is It Time to Upgrade?

For owners of older AirPods models, the case for upgrading depends on how they use them. If you are a power user deeply embedded in the Apple ecosystem, a tech enthusiast, or someone who relies on AirPods for focus in noisy environments, the quality-of-life improvements in a model with these new features are substantial. For casual listeners who primarily use their AirPods for music and calls, older models like the AirPods 2 or the first-generation AirPods Pro remain excellent devices. However, the introduction of health monitoring may be the tipping point for many, offering a value proposition that extends far beyond audio quality.

Conclusion: The Future of Audio is Personal and Proactive

The latest advancements in the AirPods lineup are a powerful statement about Apple’s vision for the future of personal computing. By infusing a simple pair of earbuds with contextual intelligence, proactive assistance, and meaningful health insights, Apple has transformed them into an indispensable life companion. Features like Adaptive Transparency Pro and Proactive Handoff are not just conveniences; they are foundational elements of a more intuitive and seamless interaction with technology. The integration of health sensors further solidifies the device’s role as a key player in Apple’s ambitious wellness ecosystem.

As we look ahead, it’s clear that this is just the beginning. The journey from the simple wired earbuds of the first iPod to today’s intelligent audio platform has been remarkable. The AirPods are no longer just an accessory to the iPhone; they are a critical endpoint for AR, a sensor for health, and a smart filter for the world around us. The future of AirPods news will likely be intertwined with developments for the Apple Vision Pro and the continued evolution of on-device AI, further cementing their place as one of Apple’s most important and personal products.