In the ever-evolving landscape of consumer technology, Apple’s strategic moves often signal shifts that ripple across the entire industry. Recent supply-chain rumors and insider reports suggest a significant recalibration in Apple’s augmented reality (AR) ambitions. The narrative is shifting from the high-end, immersive “spatial computing” of the Apple Vision Pro toward a more accessible, all-day wearable: smart glasses. This potential pivot is more than a product line adjustment; it is a statement about the future of personal computing. More importantly, it places an unassuming hero at the center of this new universe: the Apple Watch. This article explores how deprioritizing a next-generation Vision Pro in favor of mainstream AR glasses could transform the role of the Apple Watch, affect the entire product ecosystem, and set the stage for Apple’s next decade of innovation.

Understanding Apple’s Strategic Recalibration in Spatial Computing

To grasp the significance of this shift, one must first understand the two distinct philosophies it represents. The initial launch of the Vision Pro was a landmark moment for Apple, introducing the world to a powerful, self-contained “spatial computer.” It was a technological marvel designed for deep immersion, a destination device for work, entertainment, and communication. However, its high price point and form factor inherently limited its market to prosumers and early adopters. This is where the strategic pivot comes into focus, signaling a move from niche power to mainstream presence.

From “Spatial Computing” to “Ambient Intelligence”

The new direction, as suggested by recent reports on Apple’s AR plans, is toward what can be described as “ambient intelligence.” Instead of a device you consciously decide to use for specific tasks, the goal is a technology that integrates seamlessly into your daily life. Lightweight, stylish AR glasses would serve as a display layer for the digital world, overlaying contextual information onto your physical surroundings. This strategy is reminiscent of Apple’s historical product evolution. The company has always excelled at taking complex technology and making it personal and ubiquitous. We saw this in the move from the comparatively niche Macintosh to the mainstream iPod, a device that changed music forever. The subsequent evolution through the iPod nano, the iPod shuffle, and eventually the iPhone cemented this pattern. A pivot from a complex headset to simple glasses would follow the same playbook: democratizing a new form of computing for the masses.

Why the Pivot? Market Realities and Long-Term Vision

Several factors are likely driving this strategic recalibration. The high manufacturing cost and slow consumer adoption of the initial Vision Pro are market realities that are hard to ignore. Furthermore, competitors are focusing on more accessible form factors. Apple’s long-term vision has never been about winning a niche market; it’s about defining the next paradigm of personal technology. Achieving this requires a device that is not only powerful but also socially acceptable, comfortable for all-day wear, and seamlessly integrated with the user’s life. The iPod Classic and iPod mini were successful because they were portable and simple; any hypothetical iPod revival would likely focus on these same core tenets. The AR glasses represent a similar pursuit of elegant simplicity, a stark contrast to the initial, more cumbersome spatial computer. This shift requires a re-evaluation of the entire Apple ecosystem, forcing each device to find its new, optimized role in a world of ambient intelligence.

Redefining the Role of the Apple Watch in an AI-Powered Future

In this new AR-centric ecosystem, the Apple Watch is poised to graduate from a health-focused accessory to the central nervous system of your personal AI experience. Its position on the wrist, packed with sensors and providing a direct haptic link to the user, makes it the ideal conductor for an orchestra of connected devices.

Beyond Health: The Watch as a Control Center

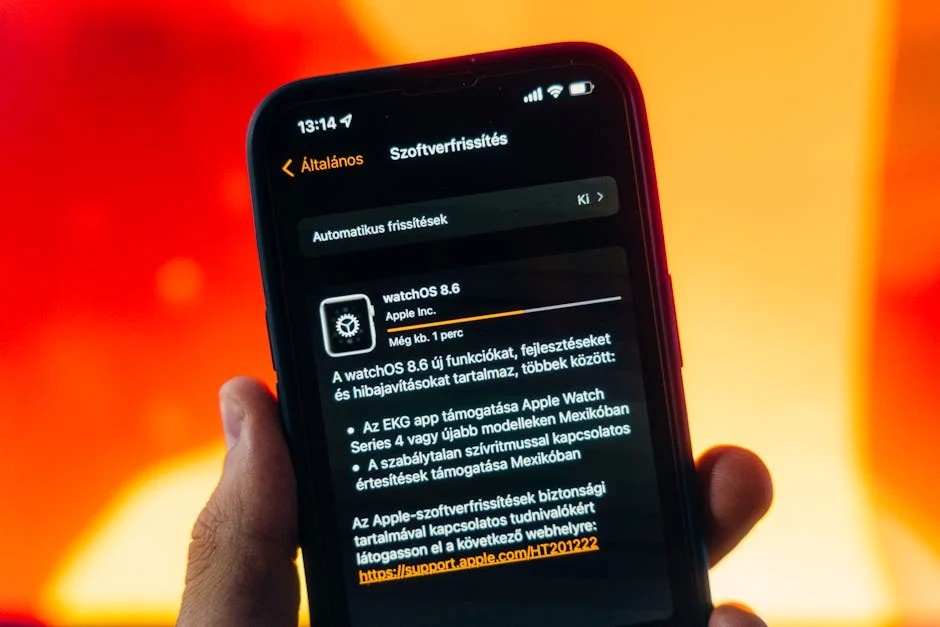

While Apple continues to highlight health features such as blood oxygen monitoring and ECG, the future role of the device is far more expansive. Imagine the AR glasses as the screen and the iPhone as the processor. The Apple Watch becomes the primary input and interaction device. Instead of relying on hand gestures that could be socially awkward, or on a separate accessory such as a hypothetical Vision Pro wand, users could interact with the AR world through subtle taps on the watch face, twists of the Digital Crown, or even micro-gestures detected by its motion sensors. This creates a discreet, intuitive, and powerful control scheme. Furthermore, recent reports about Siri point toward a more capable, on-device AI assistant. A command whispered to your Watch could instantly bring up relevant information on your glasses, creating a fluid and responsive user experience without ever needing to pull out your phone.
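To make the control scheme concrete, the taps, crown twists, and micro-gestures described above can be pictured as a small lookup from watch inputs to commands for the glasses. This is only an illustrative sketch in Python, not an Apple API: the `WatchInput` events, the command strings, and the `to_ar_command` helper are all hypothetical names chosen for the example.

```python
from enum import Enum, auto
from typing import Optional


class WatchInput(Enum):
    """Hypothetical input events a watch might report."""
    TAP = auto()
    CROWN_CLOCKWISE = auto()
    CROWN_COUNTERCLOCKWISE = auto()
    PINCH = auto()  # a micro-gesture picked up by the motion sensors


# Assumed mapping from watch inputs to glasses commands (illustrative only).
COMMANDS = {
    WatchInput.TAP: "select",
    WatchInput.CROWN_CLOCKWISE: "scroll_down",
    WatchInput.CROWN_COUNTERCLOCKWISE: "scroll_up",
    WatchInput.PINCH: "dismiss",
}


def to_ar_command(event: WatchInput) -> Optional[str]:
    """Translate a watch input into a command for the glasses, if one is mapped."""
    return COMMANDS.get(event)
```

Keeping the mapping in a plain table means the same physical gesture could be rebound per app or per context without changing the dispatch logic.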

Sensor Fusion and Contextual Awareness

The true power of this new paradigm lies in “sensor fusion.” The Apple Watch’s array of sensors—accelerometer, gyroscope, heart rate, blood oxygen—provides a rich stream of data about the user’s state and context. When combined with the visual data from the AR glasses and the processing power of the iPhone, Apple can create a deeply personalized and context-aware experience.

Real-World Scenario: You are walking down the street, and your heart rate elevates slightly as you approach a coffee shop. The Watch detects this physiological cue. Simultaneously, the AR glasses identify the shop. The system, powered by AI, infers your potential interest. A subtle haptic buzz on your wrist from the Watch alerts you, and the glasses display your usual coffee order and the current wait time. This level of integration transforms technology from a tool you command into a partner that anticipates your needs. Of course, handling this sensitive data requires ironclad security, a cornerstone of Apple’s privacy stance and a continuous focus of its iOS security work.
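The scenario above can be sketched as a simple rule-based fusion step. This is a minimal illustration in Python under stated assumptions, not a description of how Apple would build it: the `WatchSample`, `GlassesObservation`, and `Suggestion` types, the `infer_interest` function, and the 8 bpm threshold are all invented for the example, and the wait-time lookup is left out.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class WatchSample:
    heart_rate_bpm: float     # current reading from the watch
    resting_rate_bpm: float   # the wearer's recent baseline


@dataclass
class GlassesObservation:
    place_name: str           # what the glasses recognized
    place_category: str       # e.g. "coffee_shop"


@dataclass
class Suggestion:
    haptic: str               # cue to play on the watch
    overlay_text: str         # text to show on the glasses


# Hypothetical threshold: how far above baseline counts as "elevated".
ELEVATION_THRESHOLD_BPM = 8.0


def infer_interest(
    watch: WatchSample,
    seen: GlassesObservation,
    usual_orders: Dict[str, str],
) -> Optional[Suggestion]:
    """Suggest the usual order only when both signals agree."""
    delta = watch.heart_rate_bpm - watch.resting_rate_bpm
    elevated = delta >= ELEVATION_THRESHOLD_BPM
    order = usual_orders.get(seen.place_name)
    if elevated and order is not None:
        return Suggestion(haptic="light_tap", overlay_text=f"Your usual: {order}")
    return None  # stay silent when the evidence is weak
```

Requiring both the physiological cue and a known place before surfacing anything reflects the "anticipate, but don't nag" goal; a real system would presumably weigh many more signals than this two-input rule.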

A Cohesive Vision: How the AR Pivot Impacts the Entire Product Line

This strategic shift doesn’t happen in a vacuum. It necessitates a tightening of the already impressive integration across Apple’s entire product lineup, with each device playing a specialized, crucial role in delivering the final experience.

The iPhone and iPad: The Brains of the Operation

The iPhone will almost certainly serve as the primary processing engine for the first generations of AR glasses. Future iOS updates will likely include frameworks specifically designed to manage the data flow between the iPhone, Watch, and glasses efficiently. Offloading the heavy computational tasks to the phone allows the glasses to remain lightweight, cool, and energy-efficient. The iPad’s role will also evolve. Coverage of the iPad often focuses on its creative and professional capabilities. In an AR world, the iPad, especially when paired with an Apple Pencil, could become the ultimate creation tool for spatial content. An architect could sketch a 3D model on their iPad and instantly see it projected at full scale in the room through their glasses. This synergy makes concepts like a dynamic iPad vision board a tangible possibility, where digital plans and ideas can be visualized in physical space.

AirPods and Audio’s Critical Role

Audio is a critical, yet often underestimated, component of immersive AR. Recent AirPods development, particularly for the AirPods Pro and AirPods Max, has focused heavily on Spatial Audio. In an AR environment, this technology becomes indispensable. Directional audio cues can guide your attention to a notification in your peripheral vision or make a virtual character sound like they are actually in the room with you. Low-latency, high-fidelity audio from AirPods, visual overlays from the glasses, and haptic feedback from the Watch would combine to create a multi-sensory computing experience that is more immersive and intuitive than anything possible today.

The Extended Family: HomePod, Apple TV, and AirTag

The ambient intelligence layer will extend into the home. Looking at your HomePod mini could display playback controls in your field of view. The future of Apple TV might involve AR content that breaks the boundaries of the screen, projecting interactive elements into your living room during a movie or sports game. Even the humble AirTag could get a major upgrade, shifting from simply locating items to enabling persistent AR labels. You could “attach” a digital note to an object via an AirTag, visible to anyone in your family wearing the AR glasses, creating a new layer of communication and organization within the home. This deep integration across all Apple accessories is what would make the ecosystem truly compelling.

Navigating the Future: Best Practices and Potential Pitfalls

This forward-looking vision presents both immense opportunities and significant challenges for developers and consumers alike. Navigating this new landscape requires a shift in thinking about how we design and interact with technology.

For Developers: A New Frontier

Developers should prepare for a paradigm shift away from deep-engagement, screen-based apps towards what might be called “glanceable intelligence.” The key will be delivering the right information at the right time, in the most unobtrusive way possible.

Best Practices:

- Focus on Context: Build apps that leverage sensor fusion to understand the user’s context and provide proactive, relevant information.

- Design for Subtlety: UI/UX design will need to be minimal and non-distracting. Think subtle notifications and simple, gesture-based controls initiated from the Apple Watch.

- Embrace the Ecosystem: Develop experiences that intelligently span across the iPhone, Apple Watch, and AR glasses, using each device for what it does best.
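As a rough illustration of these practices, the sketch below routes a notification to whichever devices suit the moment. It is a hypothetical Python example, not an Apple framework: the `Context` and `Notice` types, the channel names, the `choose_channels` function, and the 40-character "glanceable" limit are assumptions made for the sake of the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Context:
    in_conversation: bool   # assumed to come from on-device sensing


@dataclass
class Notice:
    text: str
    urgent: bool


# Hypothetical limit on what counts as "glanceable" overlay text.
MAX_GLANCE_CHARS = 40


def choose_channels(notice: Notice, ctx: Context) -> List[str]:
    """Pick the least intrusive set of devices for a notification."""
    if ctx.in_conversation:
        # Never draw over someone's face mid-conversation; buzz only if urgent.
        return ["watch_haptic"] if notice.urgent else []
    if len(notice.text) <= MAX_GLANCE_CHARS:
        # Short enough to read at a glance: buzz, then overlay.
        return ["watch_haptic", "glasses_overlay"]
    # Too long for an overlay: buzz and leave the details on the phone.
    return ["watch_haptic", "phone"]
```

For example, a short alert such as "Gate changed to B12" would be routed to the watch and the glasses, while a long message would stay on the phone with only a haptic nudge.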

For Consumers: The Pros and Cons

For users, the primary benefit is a more seamless and integrated technological experience. The promise is a world where technology assists you without demanding your full attention. However, there are valid concerns. The “always-on” nature of AR glasses raises profound questions about privacy and social etiquette. Apple’s strong historical stance on user privacy will be tested like never before. Consumers will need to be vigilant and educated about the data they are sharing, while Apple will need to be transparent and provide robust controls, making its privacy practices more critical than ever.

Conclusion

The rumored strategic pivot from a niche, high-end spatial computer to mainstream, all-day AR glasses is not a sign of failure but a classic Apple move toward mass-market adoption. This recalibration reimagines the entire Apple ecosystem, transforming it into a cohesive, multi-sensory platform for ambient intelligence. At the heart of this new world is the Apple Watch, evolving from a health and fitness tracker into the primary controller and sensory input for our digital lives. This shift draws on the strengths of every device (the processing power of the iPhone, the creative canvas of the iPad, and the immersive audio of AirPods) to create something greater than the sum of its parts. As the next wave of Apple Watch developments unfolds, it’s clear we are looking not just at the future of a single product, but at a blueprint for the next generation of personal computing.