The Dawn of a New Creative Paradigm: Integrating Apple Pencil with Vision Pro

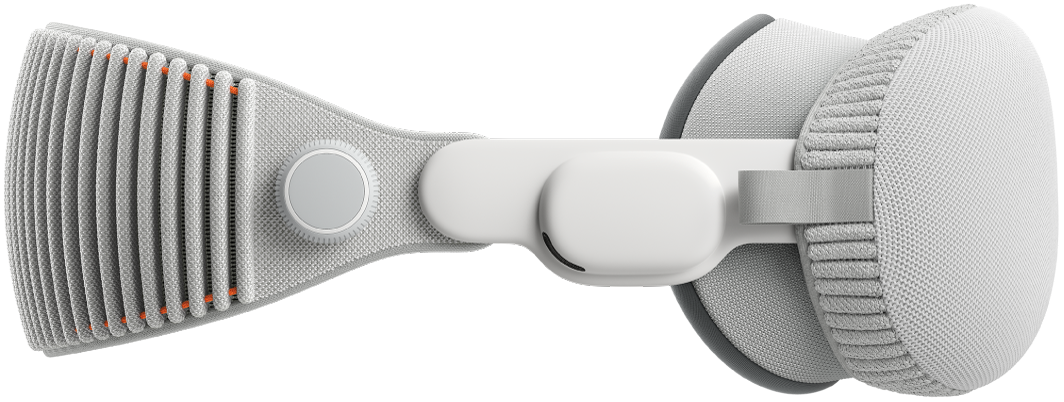

Apple Vision Pro has redefined the boundaries of personal computing, introducing a world where digital content seamlessly merges with our physical space. Its eye- and hand-tracking system has set a new standard for intuitive navigation in a spatial environment. For many creative and technical professionals, however, the quest for ultimate precision remains. Gestures are ideal for selection and navigation, but tasks that demand fine motor control, such as digital sculpting, detailed illustration, or surgical planning, call for a tool with tactile feedback and exceptional accuracy. This is where one of the most compelling possibilities for Vision Pro enters the conversation: integration with the Apple Pencil.

The prospect of pairing the Apple Pencil with Vision Pro is more than just an accessory update; it represents a fundamental shift in how we interact with spatial computing. It signals a move from consumption and navigation towards deep, professional-grade creation. This article explores the technical underpinnings, transformative real-world applications, and profound ecosystem implications of bringing Apple’s celebrated stylus into the third dimension. We will dissect what this means for artists, designers, engineers, and the entire Apple ecosystem, moving beyond the headlines to offer a comprehensive analysis of what could be a game-changing development.

The Rationale Behind Integrating Apple Pencil with Vision Pro

To understand the significance of this potential integration, we must first analyze the current input methods for Vision Pro and identify the specific gap the Apple Pencil is uniquely positioned to fill. The current system is a marvel of engineering, but the introduction of a precision tool would unlock a new echelon of professional applications.

The Current State of Vision Pro Input: Strengths and Limitations

The core of the visionOS experience is its hands-free input system. By tracking a user’s eyes for targeting and recognizing subtle hand gestures, such as a pinch for selection, Apple has created a system that feels magical and intuitive for navigating menus, browsing the web, and positioning windows. It’s a testament to Apple’s human interface design philosophy. However, this system has inherent limitations for high-precision tasks. Holding a hand steady in mid-air to draw a perfectly straight line or manipulate a tiny vertex on a 3D model can be ergonomically challenging, and it lacks the pixel-perfect accuracy that professionals rely on. This is why the prospect of Apple Pencil support is so compelling: it would address exactly these tasks.

Why the Apple Pencil is the Perfect Spatial Companion

The Apple Pencil is not just a stylus; it’s a mature, professional-grade tool honed over several generations on the iPad. Its reputation is built on key features that would translate powerfully into a spatial environment:

- Sub-pixel Precision: The ability to touch a specific point on a virtual canvas or model with pinpoint accuracy.

- Low Latency: The near-instantaneous response of the digital mark to the Pencil’s physical movement is crucial for a natural feel.

- Pressure and Tilt Sensitivity: For artists, these features are non-negotiable, allowing for varied line weights and shading techniques that bring digital art to life.
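To make the pressure-and-tilt point concrete, here is a minimal sketch of how a drawing app might turn those two signals into line weight. On iPad, UIKit already reports them through `UITouch` (`force` divided by `maximumPossibleForce` gives normalized pressure, and `altitudeAngle` is π/2 when the Pencil is perpendicular to the screen). The function name, default widths, and linear response curves below are illustrative assumptions, not Apple’s implementation.

```python
import math


def stroke_width(pressure: float, altitude_rad: float,
                 base_width: float = 2.0, min_scale: float = 0.2,
                 max_tilt_boost: float = 3.0) -> float:
    """Map normalized pressure and stylus altitude to a stroke width.

    All parameter names and defaults are hypothetical tuning values.
    """
    # Clamp inputs: pressure to [0, 1], altitude to [0, pi/2].
    p = min(max(pressure, 0.0), 1.0)
    alt = min(max(altitude_rad, 0.0), math.pi / 2)

    # Light pressure still leaves a thin line (min_scale); full pressure = base width.
    pressure_scale = min_scale + (1.0 - min_scale) * p

    # 0.0 when the stylus is upright (altitude = pi/2), 1.0 when it lies flat.
    flatness = 1.0 - alt / (math.pi / 2)

    # Tilting the stylus widens the mark, like shading with the side of a pencil.
    tilt_scale = 1.0 + (max_tilt_boost - 1.0) * flatness

    return base_width * pressure_scale * tilt_scale


print(stroke_width(0.5, math.pi / 4))
```

A linear curve is the simplest choice; many drawing apps instead let artists tune the pressure response curve to suit their hand.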

Unlike a generic controller, such as the wand-style accessories some early Vision Pro coverage speculated about, the Pencil is a familiar tool for millions of creatives. Its integration would provide a bridge for iPad power users, making the Vision Pro an even more attractive proposition and strengthening the overall Apple ecosystem.

Technical Hurdles and Potential Solutions

Bringing the Pencil into 3D space presents a significant technical challenge: tracking. How would visionOS know the Pencil’s precise position and orientation? Several approaches are possible. The Vision Pro’s external cameras could track the Pencil visually, perhaps aided by markers on a new model. Alternatively, a future Apple Pencil could incorporate an Inertial Measurement Unit (IMU) and an Ultra Wideband (UWB) chip, similar to the technology found in AirTags. UWB radios measure distance with high precision, so combining UWB ranges with the IMU’s motion data could let the Vision Pro estimate the Pencil’s position very accurately. Such a hardware update would be major accessory news, likely arriving alongside a visionOS update that enables the functionality. It would also continue a long thread in Apple’s history of input, from the iconic click wheel of the iPod classic to the multi-touch revolution of the iPhone.
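Because UWB measures distance rather than angle, the textbook way to turn ranges into a position is trilateration. The sketch below assumes, hypothetically, four UWB antennas at known, non-coplanar positions and noise-free range measurements. Subtracting the first sphere equation from the other three cancels the quadratic terms and leaves a 3×3 linear system, solved here with Cramer’s rule. It illustrates the geometry only; it is not how Apple does or would do this.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _sq(v: Sequence[float]) -> float:
    return v[0] ** 2 + v[1] ** 2 + v[2] ** 2


def trilaterate(anchors: Sequence[Vec3], ranges: Sequence[float]) -> Vec3:
    """Locate a point from its distances to four hypothetical, known anchors."""
    if len(anchors) != 4 or len(ranges) != 4:
        raise ValueError("expected exactly four anchors and four ranges")
    a0, r0 = anchors[0], ranges[0]

    # |p - a_i|^2 - |p - a_0|^2 = r_i^2 - r_0^2 simplifies to the linear row
    # 2 (a_i - a_0) . p = r_0^2 - r_i^2 + |a_i|^2 - |a_0|^2
    rows: List[List[float]] = []
    rhs: List[float] = []
    for ai, ri in zip(anchors[1:], ranges[1:]):
        rows.append([2.0 * (ai[k] - a0[k]) for k in range(3)])
        rhs.append(r0 ** 2 - ri ** 2 + _sq(ai) - _sq(a0))

    d = _det3(rows)
    if abs(d) < 1e-12:
        raise ValueError("anchors are coplanar; position is ambiguous")

    # Cramer's rule: replace one column with the right-hand side per coordinate.
    solution: List[float] = []
    for col in range(3):
        m = [row[:] for row in rows]
        for r in range(3):
            m[r][col] = rhs[r]
        solution.append(_det3(m) / d)
    return (solution[0], solution[1], solution[2])


# Usage: made-up anchor layout (meters) and a made-up Pencil position.
anchors = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
true_pos = (0.2, 0.3, 0.4)
print(trilaterate(anchors, [math.dist(a, true_pos) for a in anchors]))
```

Real ranges are noisy, so a practical tracker would solve an over-determined least-squares problem across more measurements and fuse the result with IMU data, for example with a Kalman filter.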

Redefining Creativity and Productivity in visionOS

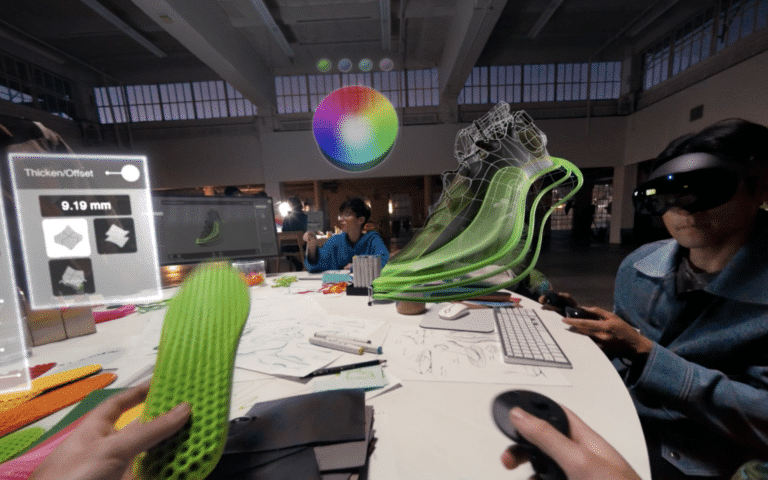

The integration of Apple Pencil with Vision Pro would not be an incremental update; it would be a catalyst for entirely new workflows across a multitude of industries. By providing a tool for high-fidelity interaction, Apple would transform the device from a spatial viewer into a spatial creator’s studio.

For the Digital Artist and Designer

Imagine a 3D artist sculpting a character model as if it were a physical clay bust floating in their studio. With the Apple Pencil, they could make precise, nuanced adjustments, using pressure sensitivity to push, pull, and smooth surfaces with a level of control that a mouse or hand gestures would struggle to match. Graphic designers could create elaborate 3D typography and logos, walking around their creation to view it from every angle. This workflow could help apps like Freeform evolve into powerful collaborative tools, going beyond the flat vision boards common on iPad today to create truly immersive, interactive 3D mood boards and brainstorming spaces.

For the Professional and Enterprise User

The applications in technical fields are equally profound. An architect could use the Pencil to perform virtual site inspections, annotating a 3D model of a building with notes and measurements directly onto the virtual structure. In the medical field, this technology could be transformative. A surgeon could practice a complex procedure on a hyper-realistic 3D anatomical model, using the Pencil as a virtual scalpel. This level of precision and realism has significant implications for training and planning, representing a potential leap forward for Apple’s ambitions in health. Engineers could collaborate on complex CAD models of machinery, disassembling and marking up components in a shared virtual space, streamlining the design and review process.

Everyday Productivity and Education

Beyond highly specialized fields, the Pencil would enhance everyday productivity. Imagine taking handwritten notes on a virtual notepad that hovers beside your Mac Virtual Display, or participating in a virtual meeting where you can use the Pencil to sketch ideas on a shared, infinite whiteboard. In education, students could dissect a virtual frog or explore the solar system, using the Pencil to interact with and query objects in a way that traditional textbooks cannot match. This level of interactivity would make learning more engaging and effective, solidifying the Vision Pro’s role as a next-generation educational tool.

Beyond a Stylus: The Pencil as a Key to the Apple Ecosystem

The true power of this integration lies not just in the Pencil’s capabilities, but in how it deepens the connections within Apple’s tightly woven ecosystem. It acts as a bridge, making the entire platform more cohesive and powerful, reinforcing Apple’s commitment to user privacy and security along the way.

Bridging the Gap Between iPad, Mac, and Vision Pro

The iPad is widely celebrated as a creative powerhouse, largely thanks to the Apple Pencil. Bringing this functionality to Vision Pro would create a seamless creative continuum. An artist could start a sketch on their iPad Pro and then bring that 2D drawing into the Vision Pro as a foundational layer for a 3D model, using the same Pencil to add depth and form. This could also revolutionize the Mac Virtual Display feature. Instead of just viewing your Mac screen, you could potentially use the Pencil to interact directly with macOS apps like Photoshop or Final Cut Pro, effectively turning them into spatially-aware creative suites. This interoperability is the hallmark of the Apple ecosystem, a concept that has evolved dramatically since the days of syncing an iPod library through iTunes.

The Hardware Question: A New Pencil or a Software Update?

A key question is whether this functionality will come via a software update for existing Pencils (such as the Apple Pencil 2 or the USB-C model) or require new hardware. A software-only solution would be welcome news for current owners, but the demands of 6DoF (six degrees of freedom: three axes of position plus three of rotation) tracking in 3D space might necessitate a new “Apple Pencil Pro.” This new device could feature advanced sensors such as an IMU and a UWB chip, and perhaps even haptic feedback to simulate the feeling of touching a virtual surface. Such a launch would be significant news for Vision Pro accessories, potentially creating a new top-tier accessory for pro users. This continuous hardware and software evolution is a constant in Apple’s playbook, a pattern that reaches back to the frequent redesigns of the iPod nano and iPod shuffle.
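Orientation matters for 6DoF tracking because the point a tracker locates (a sensor inside the barrel, say) is not the tip that touches a virtual surface. The sketch below stores orientation as a unit quaternion and rotates a sensor-to-tip offset into world space to find the tip. The 8 cm offset along the Pencil’s local z-axis and all function names are hypothetical; the rotation itself is the standard formula v' = v + w·t + u × t, with t = 2(u × v).

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length

# Hypothetical distance from the tracked sensor to the tip, in meters, along local +z.
TIP_OFFSET: Vec3 = (0.0, 0.0, 0.08)


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q = (w, u) via v' = v + w*t + u x t, t = 2(u x v)."""
    w, u = q[0], (q[1], q[2], q[3])
    c = cross(u, v)
    t = (2.0 * c[0], 2.0 * c[1], 2.0 * c[2])
    ut = cross(u, t)
    return (v[0] + w * t[0] + ut[0],
            v[1] + w * t[1] + ut[1],
            v[2] + w * t[2] + ut[2])


def tip_position(position: Vec3, orientation: Quat,
                 tip_offset: Vec3 = TIP_OFFSET) -> Vec3:
    """Combine a 6DoF pose (position + orientation) into the world-space tip location."""
    r = rotate(orientation, tip_offset)
    return (position[0] + r[0], position[1] + r[1], position[2] + r[2])


# Usage: Pencil rotated 90 degrees about the y-axis, so the tip points along +x.
half = math.sqrt(0.5)
print(tip_position((0.0, 1.0, 0.0), (half, 0.0, half, 0.0)))
```

Position alone (three degrees of freedom) would leave the tip anywhere on a sphere around the sensor; the three rotational degrees of freedom pin it down.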

Privacy and Security First

With any new input device that tracks user movement, privacy is paramount. In line with Apple’s stated privacy principles and the robust security frameworks in iOS, we can expect Apple to handle the Pencil’s spatial data with the utmost care. Tracking would most likely be processed entirely on-device, so that precise movement data never leaves the Vision Pro. This commitment to security is a core tenet of the Apple brand, and it would be a critical aspect of implementing this new technology, building user trust on the same secure foundation as iOS.

Navigating the New Paradigm: Tips and Considerations

As this technology moves from rumor to reality, both developers and users will need to adapt to new interaction models. Understanding the potential and preparing for the shift will be key to harnessing the full power of a spatially-aware Apple Pencil.

For Developers: A New Dimension of Interaction

Developers in the creative, design, and engineering spaces should begin conceptualizing how precision input could transform their visionOS apps. The introduction of the Pencil is a call to move beyond simple gesture-based interfaces. They should consider:

- Hybrid Input Models: How can hand gestures and Pencil input coexist? Gestures could be for broad actions like moving a canvas, while the Pencil is used for detailed work on it.

- 3D User Interface Elements: Designing tool palettes and menus that are easily accessible with a Pencil in a 3D space will be a new UI/UX challenge.

- API Potential: A “PencilKit for visionOS” would likely provide developers with rich data on pressure, tilt, and precise location, opening the door for incredibly innovative applications.
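The hybrid input model described in the first bullet can be sketched as a simple event router: coarse hand gestures move the canvas, while Pencil samples with nonzero pressure draw on it. Everything here is hypothetical, including the `PencilSample` and `PinchDrag` types, which stand in for whatever pressure, tilt, and location data a “PencilKit for visionOS” might expose; they are not real visionOS APIs.

```python
from dataclasses import dataclass, field
from typing import List, Tuple, Union

Vec3 = Tuple[float, float, float]


@dataclass
class PencilSample:
    """Hypothetical per-sample data from a spatially tracked stylus."""
    position: Vec3       # world-space tip position
    pressure: float      # normalized 0..1; 0 means the tip is lifted
    altitude_rad: float  # tilt relative to the drawing surface


@dataclass
class PinchDrag:
    """Hypothetical hand-gesture event: a pinch-and-drag translation."""
    delta: Vec3


@dataclass
class Canvas:
    offset: Vec3 = (0.0, 0.0, 0.0)
    strokes: List[List[Vec3]] = field(default_factory=list)
    current: List[Vec3] = field(default_factory=list)

    def handle(self, event: Union[PinchDrag, PencilSample]) -> None:
        if isinstance(event, PinchDrag):
            # Broad action goes to gestures: translate the whole canvas.
            self.offset = tuple(o + d for o, d in zip(self.offset, event.delta))
        elif event.pressure > 0.0:
            # Detailed work goes to the Pencil: record the point in canvas-local space.
            local = tuple(p - o for p, o in zip(event.position, self.offset))
            self.current.append(local)
        elif self.current:
            # Zero pressure means the tip lifted: close the active stroke.
            self.strokes.append(self.current)
            self.current = []


canvas = Canvas()
canvas.handle(PinchDrag((1.0, 0.0, 0.0)))
canvas.handle(PencilSample((1.5, 0.0, 0.0), 0.5, 1.0))
canvas.handle(PencilSample((2.0, 0.0, 0.0), 0.0, 1.0))
print(canvas.strokes)
```

Keeping strokes in canvas-local coordinates means a later gesture that moves the canvas carries existing artwork with it instead of leaving strokes behind in world space.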

For Users: Ergonomics and Learning Curve

Users will also face a period of adaptation. The ergonomics of drawing in mid-air are different from those of drawing on a solid iPad screen. We may see the emergence of new best practices, such as resting an arm on a physical desk to stabilize it and reduce fatigue during long creative sessions. There will be a learning curve in mastering the control of a tool in 3D space, but for creative professionals, the payoff in expressive freedom will be well worth the effort. This is the kind of user-focused evolution we see across the product line, from new health sensors on Apple Watch to Adaptive Audio on AirPods Pro.

The Competitive Landscape

While other VR platforms have controllers that can act as pointers, none have a tool with the creative pedigree and widespread adoption of the Apple Pencil. Meta’s Quest controllers, for example, are designed primarily for gaming and lack the pressure-sensitive tip that professional artists depend on. By leveraging the existing Pencil ecosystem and its dedicated user base, Apple could immediately differentiate the Vision Pro as the premier spatial device for serious creative and professional work, setting a new benchmark in the burgeoning field of augmented reality.

Conclusion: A New Chapter in Spatial Creation

The potential integration of the Apple Pencil with Apple Vision Pro is far more than a simple accessory pairing; it’s a statement of intent. It signals Apple’s ambition to make spatial computing an indispensable tool for the world’s most demanding creators and professionals. By bridging the gap between the intuitive, gestural navigation of visionOS and the tactile precision of the Pencil, Apple is poised to unlock a new dimension of creativity and productivity. This move would transform the Vision Pro from a revolutionary content consumption device into a paradigm-shifting content creation platform.

For artists, designers, engineers, and surgeons, it promises workflows with unprecedented immersion and control. For the Apple ecosystem, it represents a deeper, more powerful integration between its flagship devices. As we look ahead to future visionOS updates, the arrival of the Apple Pencil in the third dimension may well be remembered as the moment spatial computing truly came of age, fulfilling its promise to become the next great canvas for human ingenuity.