The Dawn of a New Input Paradigm: Why Vision Pro Needs a Precision Tool

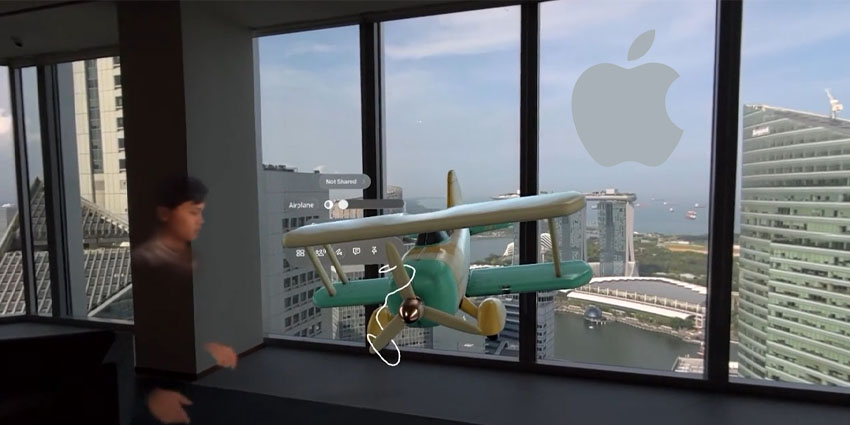

With the launch of the Apple Vision Pro, Apple didn’t just introduce a new product; it unveiled a new era of computing. The core of this experience is its revolutionary input system, a seamless blend of eye-tracking and hand gestures that feels less like operating a computer and more like interacting with the world itself. For navigation, media consumption, and general use, it’s a magical and intuitive solution. However, as the initial wave of Vision Pro coverage settles, a critical question emerges for power users, creators, and professionals: is “magical” enough for tasks that demand absolute precision?

The very nature of professional-grade work in fields like 3D design, digital art, and intricate technical planning requires a level of granular control that hand gestures, however well-tracked, may struggle to provide. This is where a new class of Vision Pro accessories becomes not just exciting, but necessary. Recent patent filings and technological speculation point towards a groundbreaking solution: a purpose-built Apple Pencil for the Vision Pro. This isn’t just about bringing a stylus into a 3D world; it’s about creating a true spatial instrument, a “Vision Pro wand” that could bridge the gap between intuitive navigation and professional precision, fundamentally changing how we create and interact in mixed reality.

From Click Wheel to Spatial Gestures: The Evolution of Apple’s Input Philosophy

To understand the significance of a potential Apple Pencil for Vision Pro, it’s essential to look at Apple’s long and deliberate history of evolving user input. Each major product line has been defined by its unique method of interaction, a journey that provides crucial context for what might come next in the world of spatial computing.

The iPod and the Dawn of Digital Navigation

Long before the touch screen dominated our lives, Apple solved the challenge of navigating vast digital libraries with an elegant, physical interface: the click wheel. This innovation, which grew out of the original iPod’s scroll wheel, was a masterclass in tactile feedback and simplicity. It allowed users to scroll through thousands of songs with precision and speed. Most of the iPod family, including the iPod Classic and several generations of the iPod Nano, was built around this satisfying and efficient input method (the screenless iPod Shuffle used a simpler control pad). Even today, discussions of a potential iPod revival often center on the beloved physicality of that interface, a testament to how a well-designed input can define a product’s legacy.

The Touch Revolution: iPhone and iPad

The iPhone’s launch in 2007 marked a seismic shift. Apple famously rejected the stylus-driven PDAs of the era, opting for the most natural pointing device we have: the finger. This multi-touch interface, which has been at the core of the iPhone and iOS for well over a decade, redefined personal computing. However, as the iPad grew into a powerful tool for artists and designers, the need for a more precise instrument became clear. The Apple Pencil was born not as a replacement for touch, but as a powerful enhancement. The Pencil has since helped turn the tablet into an indispensable tool for creatives, proving that for certain tasks, a dedicated precision instrument is superior.

The Current State: Vision Pro’s Hand and Eye Tracking

The Apple Vision Pro represents the next leap, abstracting input away from a physical surface entirely. The system, which combines eye tracking with sophisticated hand tracking, is a marvel of engineering. It makes the digital world feel tangible and responsive. Yet, it introduces new challenges. “Midas touch” issues, where the system misinterprets a glance as a command, and the lack of haptic feedback can make precise object manipulation difficult. For a surgeon planning a procedure or an architect examining a 3D model, the absence of a firm, tactile input device is a significant barrier to adoption. This is the gap a spatial Apple Pencil is poised to fill.

Under the Hood: The Technology Behind a Spatial Pencil

An Apple Pencil for Vision Pro would be a far more complex device than its iPad counterpart. It would need to provide not just a point of contact, but full six-degrees-of-freedom (6DoF) tracking in 3D space, along with sophisticated feedback mechanisms. This requires a fusion of cutting-edge hardware and deep software integration within the Apple ecosystem.

Beyond Bluetooth: Advanced Motion and Position Tracking

The current Apple Pencil communicates its position and pressure relative to the iPad’s screen. A spatial version would need to know its own absolute position and orientation in the room. Several technologies could make this possible:

- Inertial Measurement Units (IMUs): A combination of accelerometers and gyroscopes, similar to those in an iPhone or Apple Watch, would track the Pencil’s orientation and movement. This is a core component for any motion-tracked controller.

- Optical Tracking: The Pencil could be embedded with infrared LEDs or a specific visual pattern that the Vision Pro’s external cameras can track. This “inside-out” tracking method would allow the headset to know exactly where the Pencil is in its field of view.

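- Ultra-Wideband (UWB): Using a UWB radio such as Apple’s U1 chip, the same technology that powers the Precision Finding feature in AirTags, a headset equipped with a similar chip could estimate the Pencil’s distance and direction in a room. UWB ranging accuracy is usually quoted in the range of centimeters to tens of centimeters, so it would more plausibly provide a coarse fix that complements optical tracking than replace it. AirTag demonstrates Apple’s growing investment in this location technology.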

Combining these systems would create a robust and low-latency tracking solution, ensuring that the virtual representation of the Pencil moves in perfect sync with the user’s hand.
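
One common way to combine a fast but drifting inertial estimate with a slower, drift-free optical fix is a complementary filter. The sketch below is a minimal, single-axis illustration under stated assumptions, not a description of how Apple tracks anything: the class name `ComplementaryFilter`, the blend weight `alpha`, and the units are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ComplementaryFilter:
    """Blend a gyroscope prediction with an optional optical measurement.

    Hypothetical single-axis sketch: a real 6DoF tracker would fuse full
    3D position and orientation, typically with a Kalman-style filter.
    """
    alpha: float = 0.98      # weight on the IMU prediction (assumed value)
    angle_deg: float = 0.0   # current orientation estimate for one axis

    def update(self, gyro_rate_dps: float, dt: float,
               optical_angle_deg: Optional[float] = None) -> float:
        # Predict: integrate the gyroscope's angular rate over the time step.
        predicted = self.angle_deg + gyro_rate_dps * dt
        if optical_angle_deg is None:
            # No camera fix this step (for example, the Pencil is occluded),
            # so the estimate relies on the IMU alone and may drift.
            self.angle_deg = predicted
        else:
            # Correct: pull the prediction toward the optical measurement,
            # which bounds the drift caused by gyroscope bias.
            self.angle_deg = (self.alpha * predicted
                              + (1.0 - self.alpha) * optical_angle_deg)
        return self.angle_deg
```

The IMU term keeps latency low between camera frames, while the optical term prevents the integrated gyroscope error from growing without bound. A higher `alpha` trusts the IMU more and smooths out camera noise; a lower one corrects drift faster.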

Haptic Feedback and Dynamic Controls

A key limitation of the current hand-tracking system is the lack of feedback. You can “air tap” a virtual button, but you don’t feel anything. A spatial Pencil could incorporate an advanced haptic engine, similar to the Taptic Engine in the iPhone and Apple Watch. This would provide subtle vibrations, clicks, and textures, allowing a user to “feel” the surface of a virtual object or receive confirmation when an action is completed. Furthermore, the device could include physical buttons, a scroll wheel, or pressure-sensitive surfaces, turning it into a multi-functional Vision Pro wand that adapts to the task at hand. This level of feedback is critical for making spatial interactions feel grounded and real.

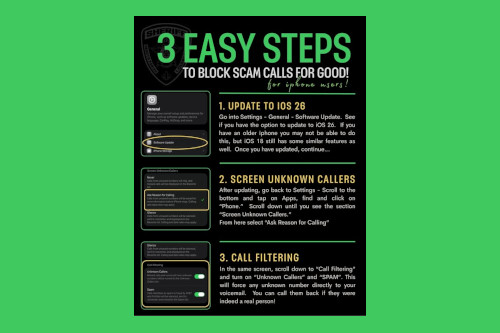

Seamless Integration, Privacy, and Security

For this to work, deep integration with visionOS is paramount. The system would need to seamlessly arbitrate between eye-tracking, hand gestures, and Pencil input. For example, a user might look at an object to select it (eye-tracking), and then use the Pencil to manipulate it with precision. This requires immense processing power and presents new privacy challenges. The precise tracking of a user’s hand movements in 3D space is sensitive data. Apple would need to extend the privacy and security commitments it makes on iOS to visionOS, ensuring all this tracking data is processed on-device and never shared without explicit user consent.
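
The look-to-select, Pencil-to-manipulate flow described above can be sketched as a small arbitration routine. This is an illustrative sketch only: `Source`, `InputEvent`, `InputArbiter`, and the event names are hypothetical and do not correspond to any visionOS API. The assumption made here is that the Pencil "owns" its target for the duration of a stroke, so a stray glance cannot retarget the action.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Source(Enum):
    EYES = auto()
    HANDS = auto()
    PENCIL = auto()


@dataclass
class InputEvent:
    source: Source
    kind: str                     # "gaze", "pinch", "tip_down", "tip_move", "tip_up"
    target: Optional[str] = None  # object under the user's gaze, if any


class InputArbiter:
    """Hypothetical arbiter: gaze picks the target, the Pencil manipulates it."""

    def __init__(self) -> None:
        self.gaze_target: Optional[str] = None
        self.stroke_target: Optional[str] = None  # set while a stroke is active

    def handle(self, event: InputEvent) -> Optional[str]:
        if event.source is Source.EYES:
            # Ignore gaze changes mid-stroke so a glance is not read as a command.
            if self.stroke_target is None:
                self.gaze_target = event.target
            return None
        if event.source is Source.PENCIL:
            if event.kind == "tip_down" and self.gaze_target is not None:
                self.stroke_target = self.gaze_target
                return f"begin stroke on {self.stroke_target}"
            if event.kind == "tip_move" and self.stroke_target is not None:
                return f"continue stroke on {self.stroke_target}"
            if event.kind == "tip_up" and self.stroke_target is not None:
                finished, self.stroke_target = self.stroke_target, None
                return f"end stroke on {finished}"
            return None
        # Hand gestures remain available, but yield to an active Pencil stroke.
        if (event.kind == "pinch" and self.stroke_target is None
                and self.gaze_target is not None):
            return f"select {self.gaze_target}"
        return None
```

Locking the target at `tip_down` is one possible answer to the “Midas touch” problem; other policies, such as a short dwell time before gaze takes effect, would be equally reasonable.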

Practical Magic: Real-World Applications for a Vision Pro Pencil

The introduction of a precision input tool would unlock a vast array of professional and creative applications, transforming the Vision Pro from a consumption device into a powerful creation station. The impact would be felt across numerous industries.

For the Creative Professional: 3D Modeling and Digital Art

Imagine a digital sculptor working on a 3D model. Instead of manipulating vertices with a mouse on a 2D screen, they could reach into the virtual space and sculpt the clay directly with the spatial Pencil. The pressure sensitivity could control the depth of the tool, while haptic feedback would simulate the resistance of the material. An illustrator could walk around their creation, adding details from any angle. This is the kind of workflow that could revolutionize animation, game design, and industrial design, turning abstract concepts from a vision board sketched on an iPad into a tangible 3D object right before your eyes.
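
As a rough illustration of how pressure might drive tool depth and haptic resistance, the sketch below maps a normalized pressure reading to a cut depth and then to a vibration strength. Every name and constant here (`sculpt_depth_mm`, `haptic_intensity`, `max_depth_mm`, `gamma`, `stiffness`) is a hypothetical choice for this example; no real Pencil or haptics API is being described.

```python
def sculpt_depth_mm(pressure: float, max_depth_mm: float = 5.0,
                    gamma: float = 2.0) -> float:
    """Map normalized pressure (0.0 to 1.0) to a tool depth in millimeters.

    A gamma above 1 gives finer control at light pressure, a common
    choice for pressure curves. Out-of-range readings are clamped.
    """
    clamped = min(max(pressure, 0.0), 1.0)
    return max_depth_mm * clamped ** gamma


def haptic_intensity(depth_mm: float, stiffness: float = 0.15) -> float:
    """Map tool depth to a haptic strength in the range 0.0 to 1.0.

    `stiffness` stands in for the virtual material: stiffer materials
    push back harder for the same depth. The result saturates at 1.0.
    """
    return min(1.0, max(0.0, stiffness * depth_mm))
```

With these assumed defaults, half pressure yields a quarter of the maximum depth, which leaves most of the pressure range for shallow, detailed work.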

For Enterprise and Medicine: Surgical Simulation and Design Review

In the medical field, the applications are profound. A surgeon could use the Vision Pro and a spatial Pencil to practice a complex operation on a hyper-realistic 3D anatomical model. The haptic feedback could simulate the feel of different tissues, providing an unparalleled training experience. This ties directly into Apple’s growing focus on health. Similarly, architects and engineers could conduct design reviews by walking through a full-scale virtual model of a building. The patent itself describes using the device to “peer around” a virtual object, allowing an engineer to physically move their head and the Pencil to inspect a 3D CAD model for interferences or design flaws in a way that is simply impossible on a flat screen.

Enhancing Productivity and Entertainment

Beyond highly specialized fields, a Vision Pro Pencil would enhance everyday tasks. It could serve as a high-precision laser pointer for presentations in a virtual conference room, or a fine-tipped brush for editing spatial photos and videos. In gaming, it could become a magic wand, a sword, or a surgical tool, offering a level of immersion and control that hand gestures alone cannot match. As Vision Pro becomes a central hub for entertainment, perhaps even influencing how Apple positions Apple TV, such an accessory could become the default controller for a new generation of spatial games.

The Bigger Picture: A New Pillar in the Apple Ecosystem

A spatial Apple Pencil would be more than just an accessory; it would be a statement about Apple’s long-term vision for spatial computing. It would signal a commitment to building a platform that is not only for consumption but for serious, professional creation.

Synergy with Other Apple Devices and Accessories

This new device would exist within Apple’s famously interconnected ecosystem. Imagine a notification from your Apple Watch appearing in your peripheral vision, which you can then interact with using the Pencil. The spatial audio from your AirPods Pro could provide directional cues that complement the Pencil’s haptic feedback, creating a multi-sensory experience. You could even potentially use the Pencil to control your HomePod mini or other smart home devices represented as virtual objects in your living room. This deep integration is what sets the Apple ecosystem apart and would make the Vision Pro platform even more compelling.

Challenges and Recommendations

Of course, there are challenges. The cost of such a sophisticated accessory would likely be high, potentially limiting its initial audience. Battery life will also be a critical factor for a device with active tracking and haptics. From a developer’s perspective, the key will be to embrace the Pencil as a powerful enhancement rather than a requirement. The best practices will involve designing apps that are fully functional with hand-and-eye tracking but gain significant new capabilities when a Pencil is present, avoiding fragmentation of the user base.
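
The “enhancement, not requirement” approach can be sketched as simple capability gating. The example below is hypothetical: the `Tool` type, the tool names, and the `pencil_connected` flag are invented for illustration, and a real app would learn about connected accessories from the platform rather than from a boolean argument.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Tool:
    name: str
    requires_pencil: bool = False  # True only for precision-dependent tools


# Hypothetical tool set for a spatial sculpting app.
TOOLS = (
    Tool("select"),
    Tool("move"),
    Tool("scale"),
    Tool("fine_sculpt", requires_pencil=True),
    Tool("pressure_brush", requires_pencil=True),
)


def available_tools(pencil_connected: bool) -> List[str]:
    """Return the tools to show: the base set always, Pencil tools only if present."""
    return [tool.name for tool in TOOLS
            if pencil_connected or not tool.requires_pencil]
```

Because the base tools never depend on the Pencil, the hand-and-eye experience stays complete, and connecting a Pencil only adds to it.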

Conclusion: Charting the Future of Spatial Interaction

The journey of Apple’s input methods—from the iPod’s click wheel to the iPhone’s multi-touch and now the Vision Pro’s spatial gestures—has always been about making technology more direct, intuitive, and powerful. While the current hand-and-eye tracking system on the Vision Pro is a remarkable achievement, it represents a starting point, not the final destination. The potential arrival of an Apple Pencil for Vision Pro, a true “Vision Pro wand,” is the logical next step in this evolution.

By providing the tactile feedback and fine-grained precision that professionals crave, such a device could unlock the Vision Pro’s true potential as a creation tool. It could transform industries, empower artists and designers, and solidify Apple’s platform as the definitive space for the future of digital interaction. This isn’t just another Apple accessory; it’s a glimpse into a future where the boundary between our physical and digital creative spaces disappears entirely.