Unlocking the Next Dimension of Creativity: The Case for an Apple Pencil for Vision Pro

Apple Vision Pro has redefined the boundaries of personal computing with its spatial interface, driven by an intuitive combination of eye, hand, and voice control. This “magical” input method has set a new standard for navigating digital content overlaid on the physical world. However, for all its innovation, the current system has limitations for tasks demanding high fidelity and precision. The very nature of “look and pinch” can make intricate creative and professional work challenging. This is where the story takes a fascinating turn, with mounting speculation and patent filings pointing toward a dedicated high-precision input device: a spatial Apple Pencil.

This potential accessory is more than just a peripheral; it represents a fundamental evolution for the platform, promising to transform the Vision Pro from a world-class consumption and communication device into an indispensable tool for creators, designers, and engineers. Just as the original Apple Pencil unlocked the true potential of the iPad for artists and note-takers, a spatial stylus could bridge the gap between intuitive navigation and professional-grade creation in three dimensions. This article delves into the technical underpinnings, potential applications, and implications of an Apple Pencil for Vision Pro, exploring how it could reshape workflows and further strengthen the integrated Apple ecosystem.

The Limits of Hands-On Spatial Computing

The current Vision Pro interface is a marvel of engineering. The ability to select an object simply by looking at it and pinching your fingers is seamless and requires almost no learning curve. It’s perfect for launching apps, browsing the web, and arranging windows in your space. However, when precision is paramount, challenges emerge. Artists attempting to sketch in a 3D space may find their hand movements lack the sub-millimeter accuracy needed for detailed work. Architects trying to adjust a virtual model might struggle with precise vertex manipulation. This is the “last mile” problem of spatial input, where broad gestures fall short of the fine motor control a dedicated tool can provide. It is a recurring theme in augmented reality, where the hardware often outpaces the nuance of its control schemes at first.

Learning from the iPad and Apple Pencil Legacy

To understand the potential impact, we only need to look at the history of the iPad. The iPad was a revolutionary touch device, but it was the launch of the Apple Pencil that cemented its place in the creative professional’s toolkit. The Pencil introduced pressure and tilt sensitivity, palm rejection, and pixel-perfect accuracy that fingers simply could not replicate. It transformed the iPad into a digital canvas, a notebook, and a design tablet. A Vision Pro Pencil could follow the same trajectory. It would not replace the hand-and-eye interface but augment it, providing a “pro” option for users who need to go beyond navigation and into deep creation. This move would be a classic Apple strategy, akin to how AirPods Pro offer a more advanced experience for audio enthusiasts.

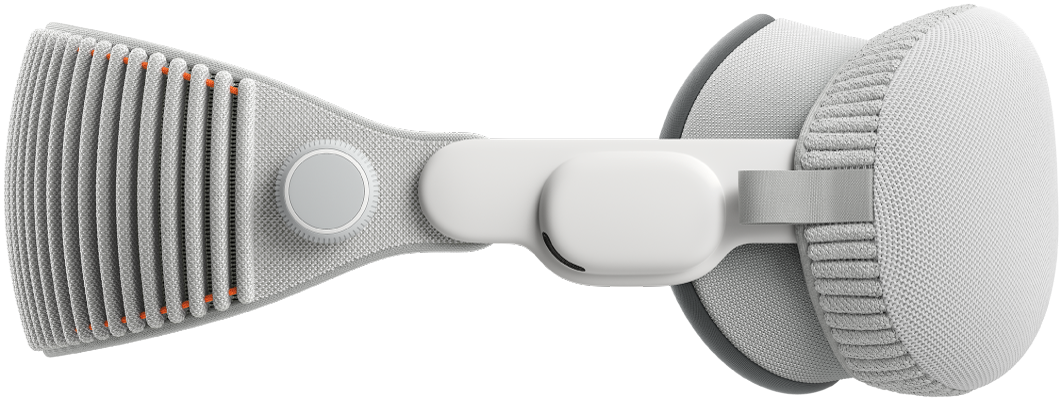

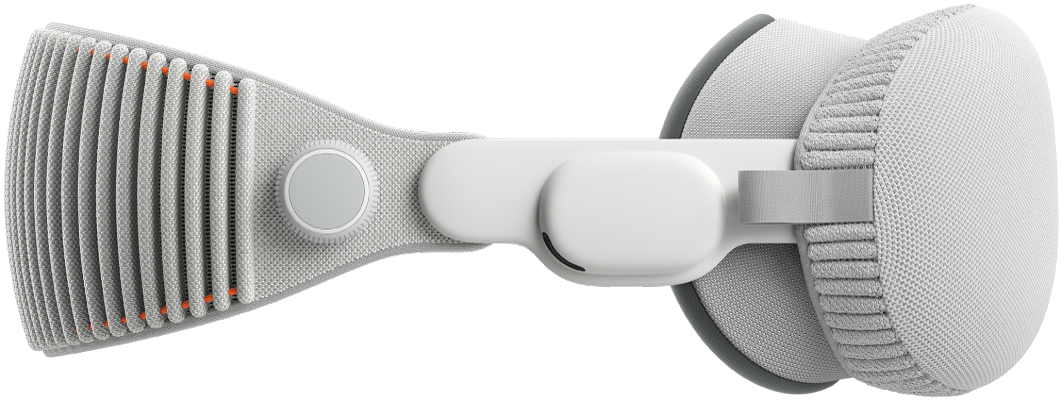

More Than a Stylus: A True “Vision Pro Wand”

Recent patent filings suggest Apple is thinking beyond simply porting the existing Pencil technology. The concept leans more towards a “Vision Pro wand,” a device purpose-built for 3D space. Such a device would likely incorporate six-degrees-of-freedom (6DoF) tracking, allowing it to report its position and orientation in the room. It could feature integrated haptic engines to simulate the texture of a virtual surface or the click of a button, and might even include physical buttons or a small touch-sensitive surface for secondary controls, which could be made customizable through future software updates.

Technical Breakdown: The Engineering Behind a Spatial Stylus

Creating a stylus that operates with precision in a three-dimensional, untethered environment is a significant engineering challenge. It requires a symphony of sensors, low-latency communication, and deep integration with the visionOS platform. A potential Apple Pencil for Vision Pro would need to solve several key technical hurdles to deliver the seamless experience users expect from the Apple ecosystem.

Advanced Tracking and Positional Awareness

The core challenge is tracking the stylus in 3D space with very low latency and high accuracy. Apple has several proven technologies it could leverage to achieve this:

Inside-Out Optical Tracking: The most straightforward approach would be for the Vision Pro’s extensive array of external cameras to track the stylus visually, much like it tracks the user’s hands. This method would require the stylus to have a distinct shape or embedded LEDs (visible or infrared) for the cameras to lock onto reliably.

Sensor Fusion with IMUs: To supplement optical tracking and handle moments where the stylus is occluded from the headset’s view, it would almost certainly contain an Inertial Measurement Unit (IMU), including an accelerometer and a gyroscope. This data, when fused with optical data, provides a robust and uninterrupted tracking signal.

Ultra-Wideband (UWB) Integration: Drawing from technology seen in AirTags and recent iPhones, the stylus could feature a UWB chip. Paired with a matching UWB radio in the headset, this would let the Vision Pro estimate the stylus’s location relative to the headset independently of the cameras, with accuracy on the order of centimeters under good conditions, providing another layer of redundancy. This ties into Apple’s broader investment in spatial awareness technologies.
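To make the sensor-fusion idea concrete, here is a minimal sketch of how IMU readings and intermittent camera fixes might be blended. It is an illustration only, not Apple's method: the `FusedTracker` class, its one-dimensional state, and the `blend` weight are all hypothetical, and a production tracker would more likely use a full 6DoF Kalman-style filter.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FusedTracker:
    """Toy 1-D stylus tracker: IMU dead reckoning plus optical correction."""

    position: float = 0.0  # metres along one axis
    velocity: float = 0.0  # metres per second
    blend: float = 0.2     # hypothetical weight given to each camera fix

    def predict(self, accel: float, dt: float) -> None:
        """Integrate an accelerometer reading over dt seconds."""
        self.velocity += accel * dt
        self.position += self.velocity * dt

    def correct(self, optical_position: Optional[float]) -> None:
        """Nudge the estimate toward a camera fix; None means occluded."""
        if optical_position is None:
            return  # no fix this frame, keep coasting on the IMU
        self.position += self.blend * (optical_position - self.position)


tracker = FusedTracker()
tracker.predict(accel=1.0, dt=0.1)  # IMU-only step
tracker.correct(None)               # stylus hidden from the cameras
tracker.correct(0.11)               # cameras see the stylus again
```

The point of the split is that `predict` runs at the IMU's high sample rate and keeps the estimate alive during occlusion, while `correct` removes the drift that pure integration accumulates whenever an optical (or, hypothetically, UWB) fix is available.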

Haptics, Pressure, and Multi-Dimensional Input

The magic of the Apple Pencil on iPad is its ability to translate physical actions into digital strokes. A spatial version would need to expand this concept into the Z-axis.

Pressure Sensitivity: This could control the thickness of a 3D brush stroke, the depth of an extrusion in a modeling program like Maya or Blender, or the force applied to a virtual object.

Tilt and Roll Sensitivity: The angle at which the user holds the stylus could change the shape of a tool, for example, switching from a fine-point pen to a broad chisel tip by tilting it. The roll, or rotation, of the stylus could be used for actions like turning a virtual screwdriver or adjusting a dial.

Advanced Haptics: A sophisticated haptic engine could provide feedback that simulates drawing on different surfaces—the rough texture of canvas, the smooth glide of glass, or the resistance of sculpting digital clay. This tactile feedback is critical for making the digital interaction feel physical and intuitive.
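The pressure, tilt, and roll mappings described above can be sketched in a few lines. This is a hedged illustration rather than any real stylus API: `StylusSample`, the width range, the `gamma` response curve, and the 35-degree chisel threshold are all made-up values chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StylusSample:
    """One hypothetical reading from a spatial stylus."""

    pressure: float  # normalised: 0.0 = no force, 1.0 = full force
    tilt_deg: float  # angle away from upright, in degrees
    roll_deg: float  # rotation about the barrel axis, in degrees


def brush_width(sample, min_width=0.5, max_width=8.0, gamma=2.0):
    """Map pressure to stroke width; gamma > 1 gives finer control at light force."""
    p = min(max(sample.pressure, 0.0), 1.0)  # clamp noisy readings into [0, 1]
    return min_width + (max_width - min_width) * p ** gamma


def tip_shape(sample, chisel_threshold_deg=35.0):
    """Switch from a fine point to a chisel tip once the stylus is tilted enough."""
    return "chisel" if sample.tilt_deg >= chisel_threshold_deg else "fine-point"


def dial_delta(prev_roll_deg, roll_deg):
    """Shortest signed rotation between two roll readings, in [-180, 180) degrees."""
    return (roll_deg - prev_roll_deg + 180.0) % 360.0 - 180.0


sample = StylusSample(pressure=0.5, tilt_deg=40.0, roll_deg=10.0)
width = brush_width(sample)          # half pressure on a squared curve
shape = tip_shape(sample)            # tilted past the threshold
turn = dial_delta(350.0, sample.roll_deg)  # wraps across 0 degrees
```

The wrap-around handling in `dial_delta` matters for the screwdriver and dial examples: without it, rolling from 350 to 10 degrees would register as a large backward turn instead of a small forward one.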

Seamless Connectivity and Power Management

Any new accessory must adhere to Apple’s high standards for ease of use. Connectivity would likely be handled by a custom low-latency wireless protocol built on Bluetooth, so that digital ink appears where the user intends with no perceptible delay. Power management is also key. We can expect a solution similar to the current Apple Pencil, perhaps with magnetic charging by attaching it to the Vision Pro’s external battery pack or a dedicated charging case, ensuring it’s always ready for a creative session. The entire process, from pairing to charging, would be designed to be frictionless, a hallmark of the user experience across the iPhone and Apple Watch.

Transforming Workflows: Real-World Applications and Ecosystem Integration

The introduction of a high-precision spatial stylus would catalyze a wave of innovation, enabling new applications and workflows that are currently impractical or impossible with hand tracking alone. It would firmly position the Vision Pro as a serious tool for a wide range of professional industries.

A New Era for Creative Professionals

For artists, designers, and engineers, a Vision Pro Pencil would be a game-changer. Here are some concrete examples:

Case Study: 3D Digital Sculpting: A character artist for a video game company could don a Vision Pro and use the spatial stylus to sculpt a high-polygon model as if it were a physical block of clay floating in their office. Haptic feedback would simulate the tool’s interaction with the material, while pressure sensitivity would control the depth and intensity of each carve and smooth operation. This hands-on, intuitive process would be far more natural than using a mouse and keyboard.

Architectural Visualization and Annotation: An architect could lead a client on a virtual walkthrough of a building design. Using the stylus, they could make real-time modifications, such as moving a wall, changing a material texture, or leaving precise 3D annotations in the space for their team to review later. This would extend the markup and annotation workflows already familiar from the iPad into three dimensions.

Spatial Painting and Illustration: A digital painter could create room-scale art installations, using the stylus to paint with light, texture, and color in three dimensions, creating immersive works that viewers could walk through and experience from any angle.

Revolutionizing Enterprise, Education, and Health

The impact extends far beyond the creative arts. In enterprise and specialized fields, the combination of spatial computing and precision input opens new doors.

Medical and Surgical Training: Medical students could use a haptic-enabled stylus to perform complex simulated surgeries. The device could provide realistic feedback, mimicking the resistance of tissue and bone, and offer a safe and repeatable way to hone critical skills.

Complex Data Interaction: Scientists and financial analysts could visualize and interact with complex, multi-dimensional data sets. A stylus would allow them to precisely select data points, draw connections, and manipulate models in a way that is far more intuitive than 2D graphs.

Deep Integration with the Apple Ecosystem

A Vision Pro Pencil wouldn’t exist in a vacuum. Its greatest strength would be its integration with macOS, iPadOS, and iOS. Imagine starting a 2D sketch on an iPad with an Apple Pencil, then seamlessly “pulling” that sketch into the Vision Pro as a 3D object to be manipulated with the spatial stylus. This kind of Handoff and Universal Control functionality is central to the appeal of the Apple ecosystem. Voice commands via Siri could be used to change tools or colors on the fly (“Hey Siri, switch to the airbrush tool”), further streamlining the creative process and making Siri directly relevant to pro workflows. All of this would, of course, be built on Apple’s foundation of user privacy and security.

Strategic Implications and Potential Challenges

While the potential is enormous, introducing a new primary input device for a flagship product carries strategic weight and potential pitfalls. Apple’s approach will be carefully considered to enhance, not complicate, the Vision Pro experience.

The Competitive Landscape

Competitors like Meta have long used controllers as the primary input for their VR headsets. However, these are often designed with gaming as the first priority. Apple’s “Vision Pro wand” would likely be positioned differently: not as a game controller, but as a precision tool for productivity and creativity. This focus on pro-level accuracy and build quality, combined with deep software integration, would be Apple’s key differentiator, much like the Apple TV 4K differentiates itself with ecosystem features.

Best Practices and Developer Considerations

For developers, the key will be to treat the stylus as an enhancement, not a requirement.

Common Pitfall: A critical mistake would be for developers to create apps that *require* the stylus, thereby fragmenting the user base and undermining the core hand-tracking interface. The “magic” of Vision Pro is its controller-free nature.

Best Practice: The best applications will be fully navigable with hands and eyes but will unlock a deeper level of precision and functionality when a stylus is detected. For example, a modeling app might let users move and scale large objects with their hands at all times, and enable fine-detail sculpting tools only when the stylus is connected.
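This enhancement-not-requirement pattern amounts to simple capability detection. The sketch below is illustrative only; the tool names and the `available_tools` function are hypothetical and do not correspond to any visionOS API.

```python
# Tools every user gets with hand-and-eye input alone (hypothetical names).
BASE_TOOLS = ("select", "move", "scale")

# Extra precision tools unlocked only while a stylus is connected.
STYLUS_TOOLS = ("fine_sculpt", "vertex_edit")


def available_tools(stylus_connected: bool) -> tuple:
    """Return the tool palette for the current input hardware.

    The base tools are always present, so the app never becomes
    unusable when the stylus is missing; the stylus only adds to them.
    """
    if stylus_connected:
        return BASE_TOOLS + STYLUS_TOOLS
    return BASE_TOOLS


hands_only = available_tools(stylus_connected=False)
with_stylus = available_tools(stylus_connected=True)
```

Because the stylus palette is a strict superset of the hands-only palette, connecting or disconnecting the accessory mid-session changes what is offered without removing anything the user could already do.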

Apple’s Product Philosophy: A Lesson from the iPod

Looking at Apple’s history provides context. Many remember the focused simplicity of devices like the iPod Classic or iPod Nano, and the persistent nostalgia for them speaks to the appeal of devices that do one thing exceptionally well. This philosophy is alive in Apple’s accessories. The Apple Pencil does one thing: precision input. A HomePod mini is focused on great sound and smart home control. A Vision Pro Pencil would follow this doctrine. It wouldn’t try to be a universal controller; it would aim to be the best tool for spatial precision. This focus is what has long set Apple’s hardware apart, from the iPod mini to today’s flagship iPhones.

Conclusion: A Precise Future for Spatial Computing

The prospect of an Apple Pencil for Vision Pro is more than just an exciting rumor; it is the logical next step in the evolution of spatial computing. While the current hand-and-eye tracking system is revolutionary for navigation and general use, a dedicated high-precision stylus is the key to unlocking the platform’s full potential for professional creation. By providing the tactile feedback and sub-millimeter accuracy that creators demand, such a device would bridge the final gap, transforming the Vision Pro from a window into the digital world into a workshop for building it.

As rumors of an Apple Pencil for Vision Pro continue to surface, it’s clear that this potential accessory is not merely about adding another way to interact. It’s about deepening the connection between the user and their digital creations, enabling a new generation of artists, designers, and innovators to work in the most natural and intuitive way possible: in three dimensions. The spatial computing era has begun, and a precision stylus may be the tool that truly defines it.